Car Tolling – A case for Edge Computing

Governments often face tight budgets for infrastructure development, and car tolling is increasingly seen as a way to raise funds¹, which makes it more and more prevalent. From 2008 to 2018, the total length of tolled roads in Europe increased by 23%² and tolling revenue in Europe increased by 37%³ to €31.3 billion per year. Similarly, from 2010 to 2015 the United States saw a 63% increase in transponders and 52% more tolling revenue, reaching $13.8 billion in 2015⁴. On top of that, despite car-sharing efforts, car ownership and traffic are still increasing in many countries, e.g. Germany⁵⁶, France⁷ and India⁸. Growing volumes of traffic, devices, and data points push current tolling solutions to their limits. Taking data to the edge in new and existing tolling solutions, by adding a data persistence layer and synchronizing parts of the data, can make tolling more efficient and reliable.

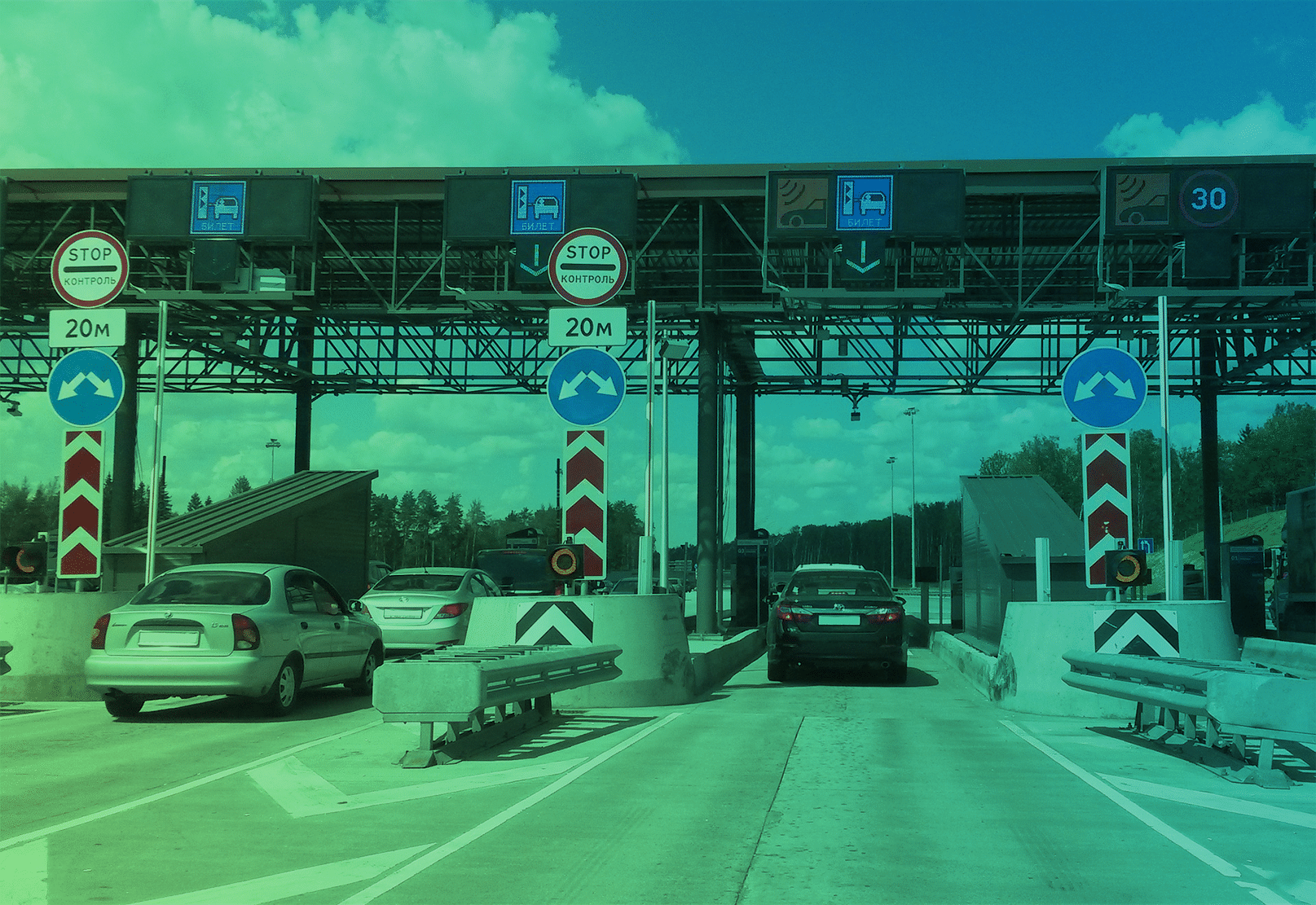

Setting the stage: a typical car tolling situation

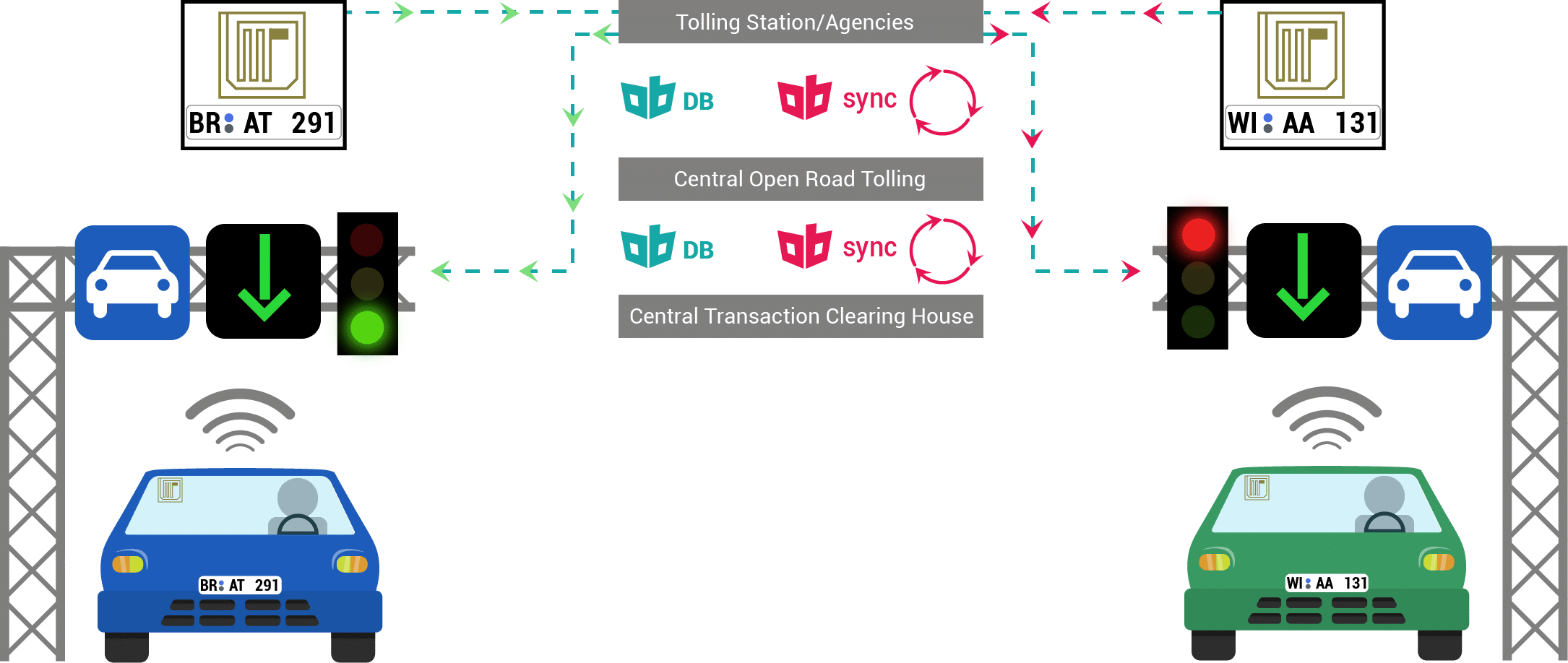

A national infrastructure company has deployed several hundred car tolling stations all over the country. These stations automatically recognize passing cars, either by reading license plates with visual recognition or wirelessly, e.g. by receiving data from an RFID transponder in the car. To ensure that only eligible cars pass through the tolling station and violators are fined, the tolling station software must look up the gathered vehicle information, among millions of entries, as fast as possible. If the data look-up is not fast enough, or the data on the roadside units and tolling stations isn’t up to date and in sync with the central data, the tolling station loses money.

“The importance of mobile apps is increasing for Kapsch TrafficCom so that we see ObjectBox’ edge computing database solution as an interesting future base technology for all types of mobility apps.”

Why edge computing and fast lookups are key to today’s car tolling systems

In general, modern nationwide tolling infrastructure consists of three systems: tolling stations operated by the respective agencies, central open road tolling (also called mobile tolling⁹), and central transaction clearing houses. Within this infrastructure, all data related to violators and other operational information needs to be synchronized between these three systems consistently and with as little delay as possible. When it is not, this adds to other problems and leaves car tolling system operators facing high monetary losses every day.

Challenges of today’s car tolling systems

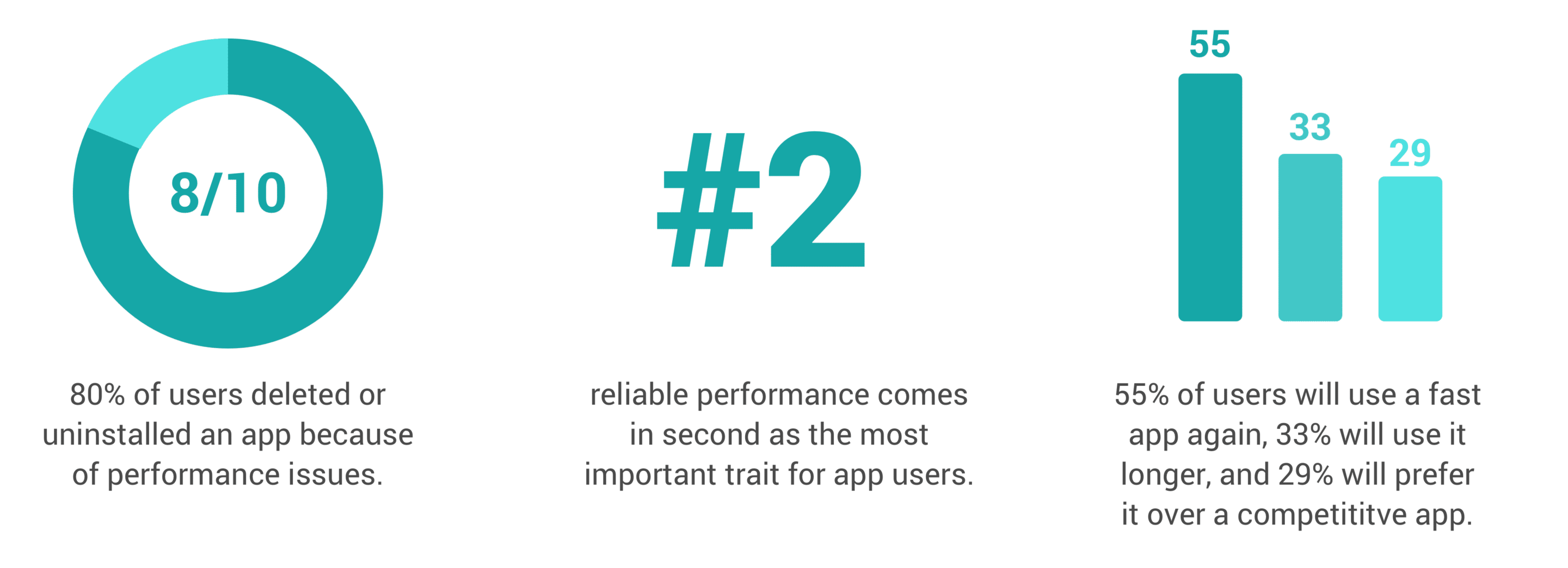

Today’s car tolling systems are based on the fundamental idea that cars do not need to stop to be checked or charged. Thus, as the cars move quickly through the scanning area, the main challenge relates to the amount of data that needs to be searched within a very short time frame. To be successful, the license plate needs to be read and looked up in a database in near real-time.
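To make the lookup step concrete, here is a minimal sketch of a local, indexed plate lookup on a roadside unit. It uses Python's built-in `sqlite3` module purely as a stand-in for an edge database; the table layout, the function names, and the sample plates are assumptions for illustration and do not come from any real tolling system.

```python
import sqlite3

def normalize_plate(raw: str) -> str:
    """Uppercase and drop separators so OCR variants of one plate match."""
    return "".join(ch for ch in raw.upper() if ch.isalnum())

def open_local_store() -> sqlite3.Connection:
    # In-memory database for this sketch; a real station would persist to disk.
    conn = sqlite3.connect(":memory:")
    # The PRIMARY KEY gives an index on plate, so a lookup avoids a full scan.
    conn.execute(
        "CREATE TABLE vehicles (plate TEXT PRIMARY KEY, eligible INTEGER NOT NULL)"
    )
    return conn

def register(conn: sqlite3.Connection, plate: str, eligible: bool) -> None:
    conn.execute(
        "INSERT OR REPLACE INTO vehicles (plate, eligible) VALUES (?, ?)",
        (normalize_plate(plate), int(eligible)),
    )

def check_plate(conn: sqlite3.Connection, raw_plate: str) -> str:
    """Return 'eligible', 'violator', or 'unknown' for a scanned plate."""
    row = conn.execute(
        "SELECT eligible FROM vehicles WHERE plate = ?",
        (normalize_plate(raw_plate),),
    ).fetchone()
    if row is None:
        return "unknown"
    return "eligible" if row[0] else "violator"

# Hypothetical sample data
store = open_local_store()
register(store, "B-AB 1234", True)
register(store, "M-XY 987", False)
```

Because the data lives on the device, `check_plate` involves no network round trip, which is the point the near real-time requirement hinges on.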

Near-realtime requirements

From a development perspective, this challenge is rooted in:

- accessing data from a remote location (speed of communication, speed of network)

- keeping data in synchronization with car tolling stations that are closer to the drivers and/or roadside units

- database speed on remote servers

- database speed on roadside units (car tolling edge devices)

- limitations of existing hardware as some systems are quite old, and rolling out new hardware is expensive

Strict uptime guarantees

Furthermore, stations may shut down from time to time due to weather, power outages, vandalism or simply technical failures. However, tolling providers generally need to provide strict uptime guarantees, and service level agreements therefore often include penalty fees for excessive downtime. Such events cost the providers substantial amounts of money, and data loss, i.e. undetected violators, even more so.
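One common way to keep recording while the uplink is down is a store-and-forward outbox: events are queued locally and delivered in order once the connection returns. The sketch below illustrates that idea only. The `Outbox` and `FlakyUplink` classes are hypothetical, and the queue is held in memory for brevity, whereas a real station would persist it so that a power outage does not lose events.

```python
from collections import deque

class Outbox:
    """Queues events locally and forwards them in order when the uplink works."""

    def __init__(self, send):
        # send: callable(event) that raises ConnectionError while offline
        self._send = send
        self._pending = deque()

    def record(self, event) -> None:
        self._pending.append(event)
        self.flush()  # try to deliver right away

    def flush(self) -> int:
        """Deliver queued events until one fails; return how many were sent."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline, keep the event for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)

class FlakyUplink:
    """Hypothetical uplink used to simulate an outage."""

    def __init__(self):
        self.online = False
        self.delivered = []

    def __call__(self, event) -> None:
        if not self.online:
            raise ConnectionError("uplink down")
        self.delivered.append(event)

uplink = FlakyUplink()
outbox = Outbox(uplink)
outbox.record({"plate": "BAB1234", "station": 17})  # queued while offline
outbox.record({"plate": "MXY987", "station": 17})
```

An event is removed from the queue only after `send` returns without error, so a failed delivery never drops a detected violator.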

Privacy and legal regulations

Adding to this, privacy and legal requirements differ from country to country and increase the complexity of the systems and timings. For example, in Austria the pictures and derived license plate information may only be used for checking; if no violation is detected, they must be removed in an unrecoverable manner.¹⁰ The data of potential violators, on the other hand, may be stored for the sole purpose of toll collection or prosecution, but only for a maximum of three years.
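The Austrian example can be read as a simple retention rule that an edge device applies locally. The following is a rough sketch under stated assumptions: the `Observation` type and `apply_retention` function are hypothetical, three years is approximated as 3 × 365 days, and dropping a record from a list does not by itself make the data unrecoverable on disk, which a real system would have to ensure separately.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: "three years" approximated as 3 * 365 days for this sketch.
MAX_RETENTION = timedelta(days=3 * 365)

@dataclass
class Observation:
    plate: str
    captured_at: datetime
    violation_suspected: bool

def apply_retention(observations, now):
    """Keep only suspected violations that are within the retention window."""
    kept = []
    for obs in observations:
        if not obs.violation_suspected:
            continue  # no violation detected: must not be retained
        if now - obs.captured_at > MAX_RETENTION:
            continue  # older than the maximum storage period
        kept.append(obs)
    return kept

# Hypothetical sample data
now = datetime(2024, 1, 1)
sample = [
    Observation("BAB1234", datetime(2023, 12, 31), False),  # cleared, dropped
    Observation("MXY987", datetime(2023, 6, 1), True),      # recent violation, kept
    Observation("W12345", datetime(2020, 1, 1), True),      # too old, dropped
]
kept = apply_retention(sample, now)
```

Running the rule on the device means cleared data never has to leave the station in the first place.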

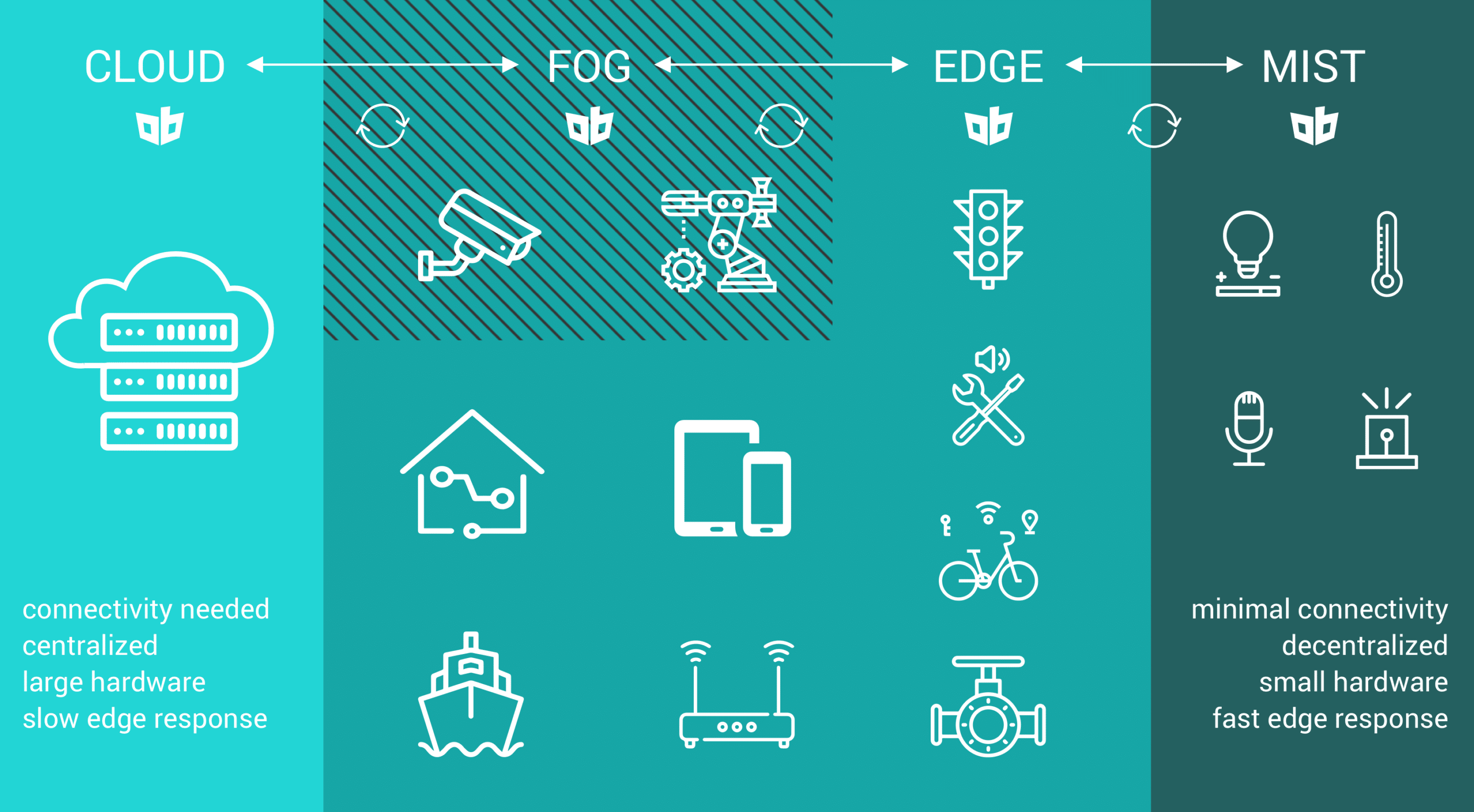

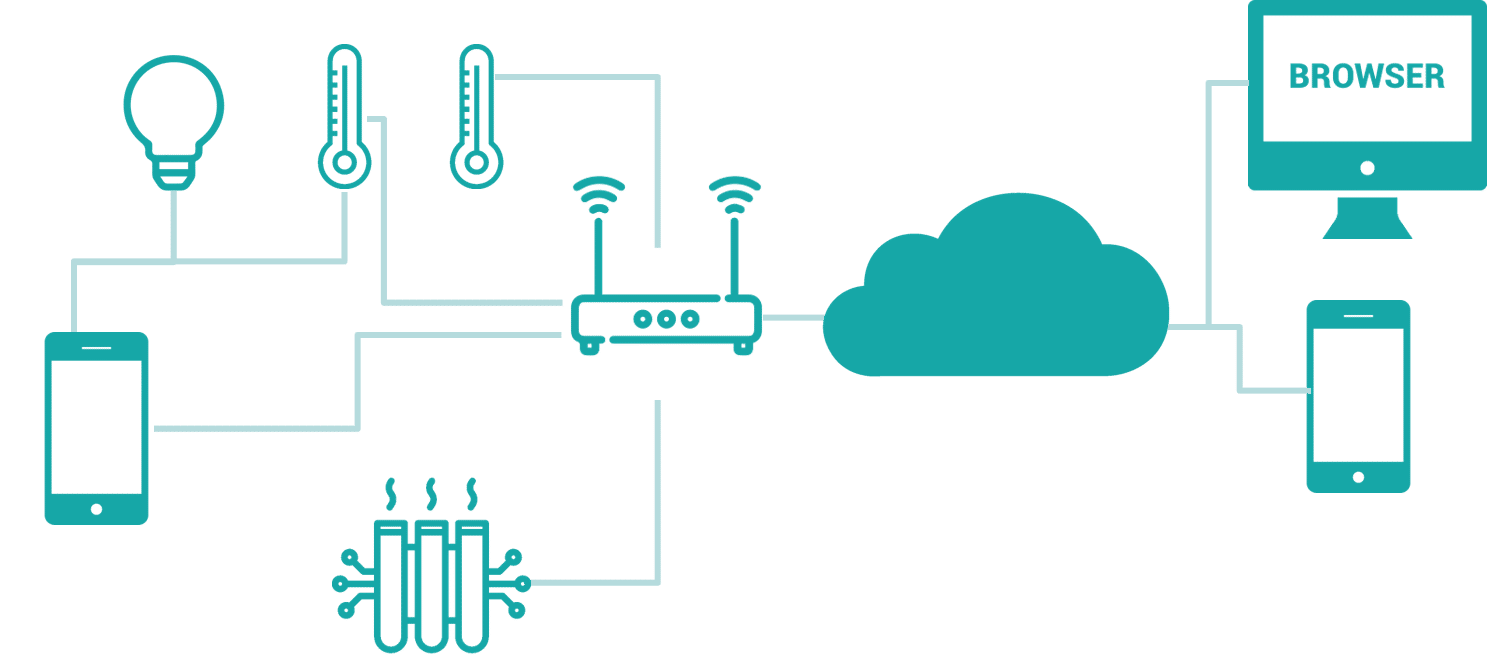

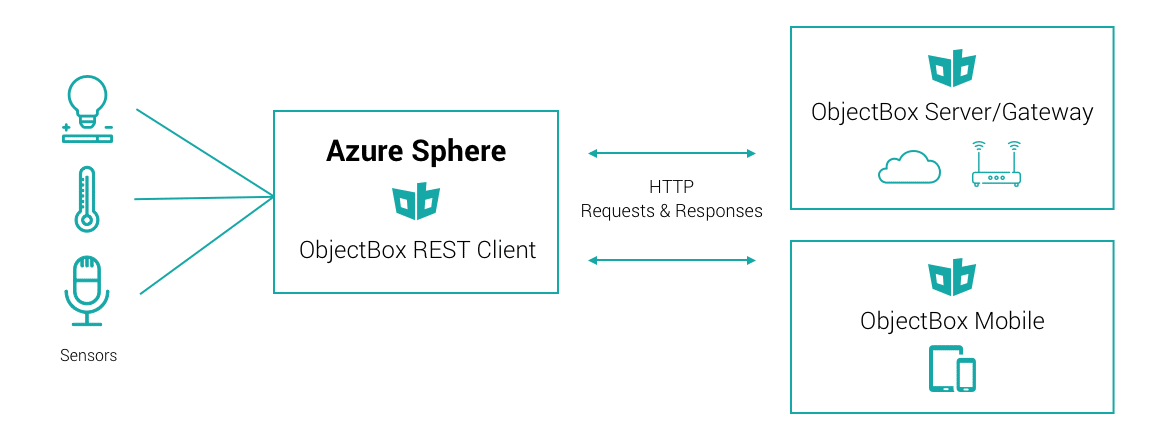

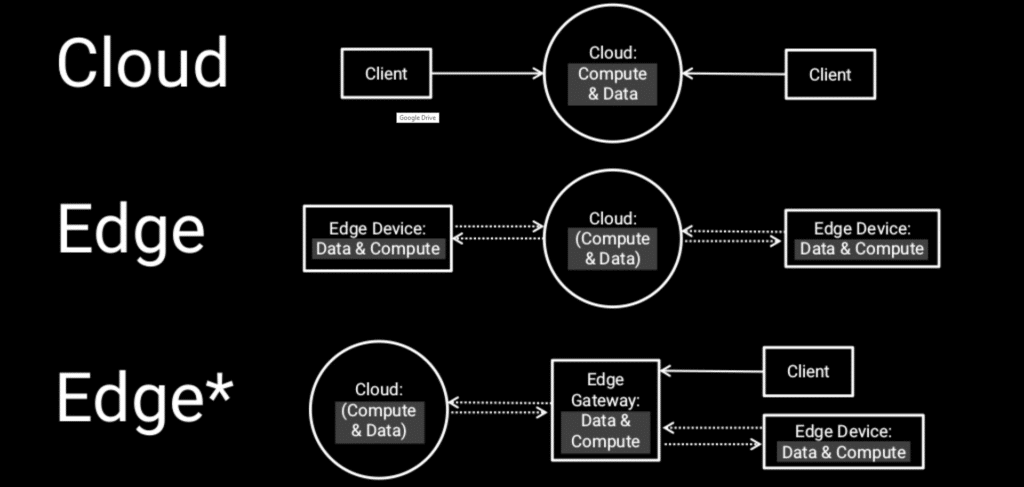

Edge Computing

Edge computing (local data storage and data sync) can help solve these challenges. Deploying local persistence on every type of tolling station, i.e. open and static stations, as well as on the central server makes it possible to meet the near-realtime requirements, improve uptime (when offline or on flaky networks), and, last but not least, meet privacy regulations. From a technical point of view, a solution that supports all platforms and operating systems is the most efficient approach to ensure edge persistence and data consistency across devices.

Edge database and Sync are the centerpiece of efficient car tolling solutions

There are a couple of edge databases out there, but out-of-the-box data synchronization solutions are very rare. A fast edge database that reliably persists the needed data and supports fast lookups is essential. Data synchronization keeps the vehicle data in each station’s internal memory up to date with the central server, so the station makes its decision based on the most accurate data available. Additionally, the other systems involved in the tolling infrastructure consistently receive the most recent information with no further effort required.
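As an illustration of what such synchronization involves, the sketch below shows a simple version-based pull: the station remembers the last change it applied and asks the central server only for newer ones. This is a generic pattern, not ObjectBox's sync protocol or API; the `CentralServer` and `StationReplica` classes and their methods are hypothetical.

```python
class CentralServer:
    """Keeps an ordered change log; each change has an increasing version."""

    def __init__(self):
        self._log = []  # entries: (version, plate, eligible), None means delete

    def publish(self, plate, eligible) -> None:
        self._log.append((len(self._log) + 1, plate, eligible))

    def changes_since(self, version):
        return [change for change in self._log if change[0] > version]

class StationReplica:
    """Local copy of the vehicle data held by one tolling station."""

    def __init__(self):
        self.vehicles = {}  # plate -> eligible
        self.version = 0    # last change applied locally

    def pull(self, server) -> int:
        """Apply all changes newer than the local version; return their count."""
        changes = server.changes_since(self.version)
        for version, plate, eligible in changes:
            if eligible is None:
                self.vehicles.pop(plate, None)
            else:
                self.vehicles[plate] = eligible
            self.version = version
        return len(changes)

# Hypothetical sample run
server = CentralServer()
station = StationReplica()
server.publish("BAB1234", True)
server.publish("MXY987", False)
station.pull(server)
server.publish("MXY987", True)   # violator settled the toll
server.publish("BAB1234", None)  # vehicle deregistered
station.pull(server)
```

Transferring only the changes since the last known version keeps each sync small, which matters on constrained roadside hardware and unreliable links.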

Deploying such an edge persistence and data sync solution mitigates the losses caused by station shutdowns, and Internet connection issues are no longer a problem. The stations’ operating company also no longer loses violators’ information for technical reasons.

Summary – Car tolling is moving to the edge

As this case study shows, edge computing is a strong fit for modern infrastructure. In the context of car tolling, speed, reliable data storage and synchronization are indispensable, which makes ObjectBox an effective solution for today’s requirements and for future technological advancements.

If you are interested in learning more, feel free to get in touch with us! We appreciate any kind of feedback.