Why Edge Computing is More Relevant in 2021 Than Ever

The world was forced to digitize faster and more extensively in 2020 and 2021. COVID has created the need to remodel how work, socializing, production, entertainment, and supply chains function. Despite decades of digitization efforts, the pandemic has exposed how many digitization challenges remain. Many companies and countries now realize that they have fallen behind, and those that had not yet digitized were hit hardest by the pandemic. [1] With people leaning heavily on online solutions, internet infrastructure is at its capacity limit. [2] Accordingly, users are seeing broadband speeds drop by as much as half. [3] In Europe, governments even asked Netflix, Amazon Prime, YouTube and other streaming services to reduce their video quality to improve network speed. [4]

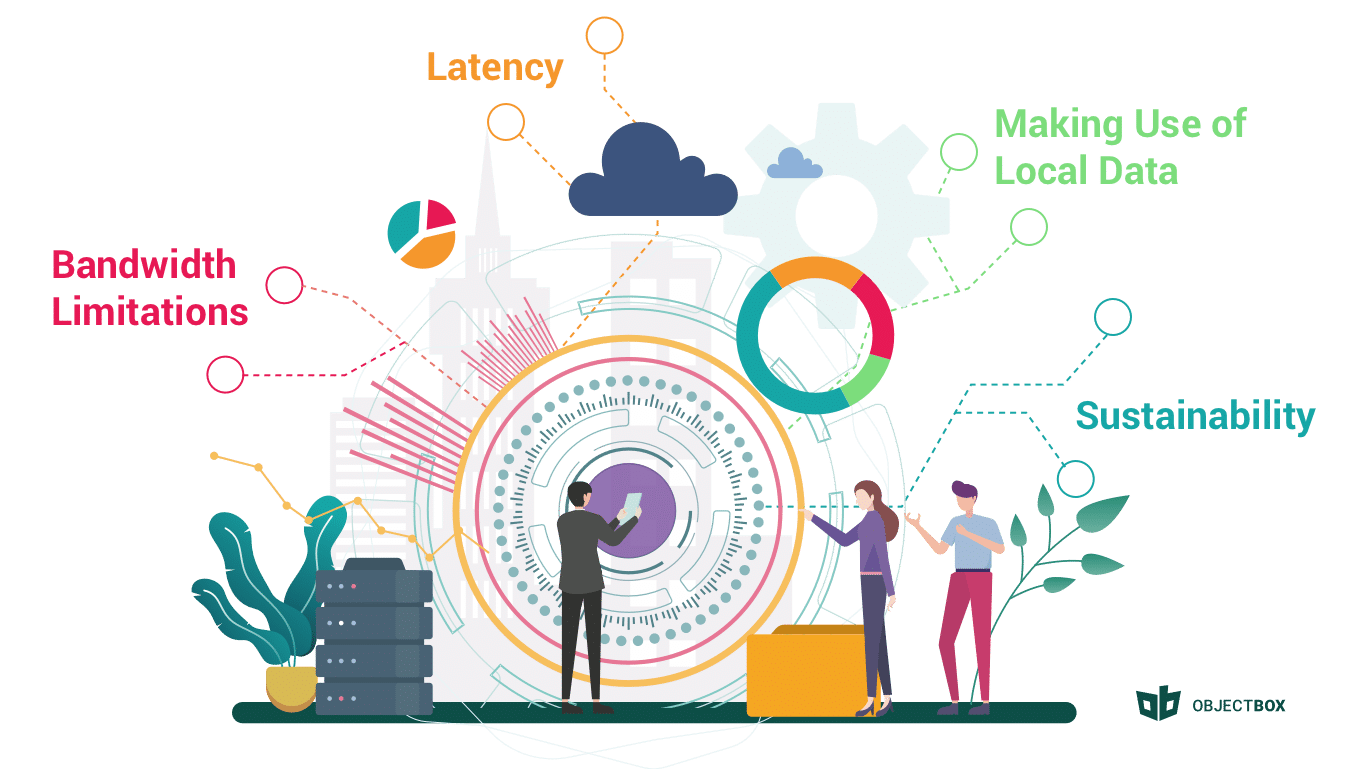

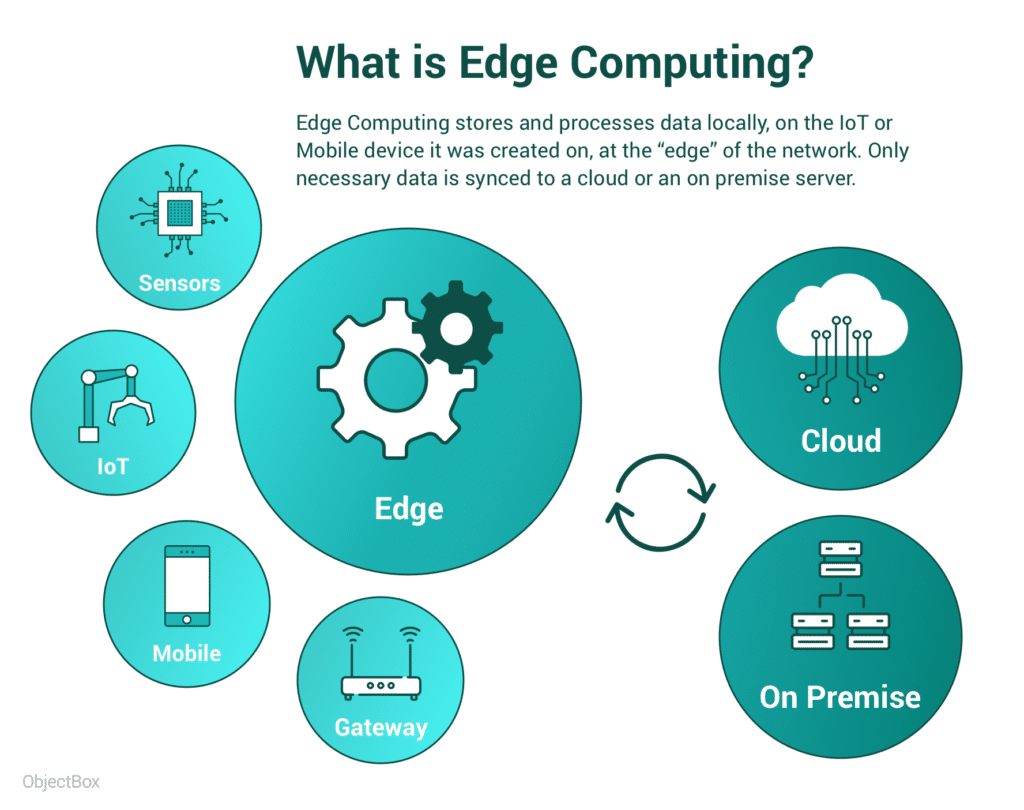

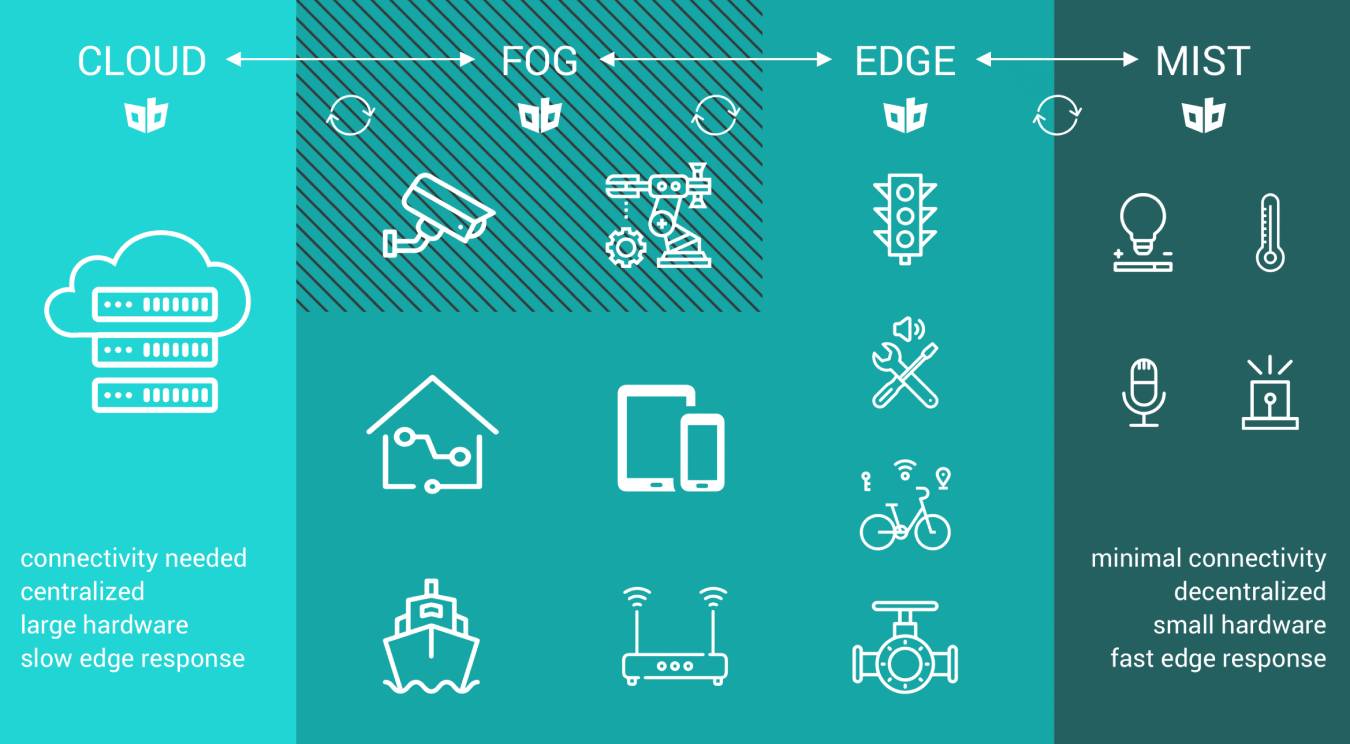

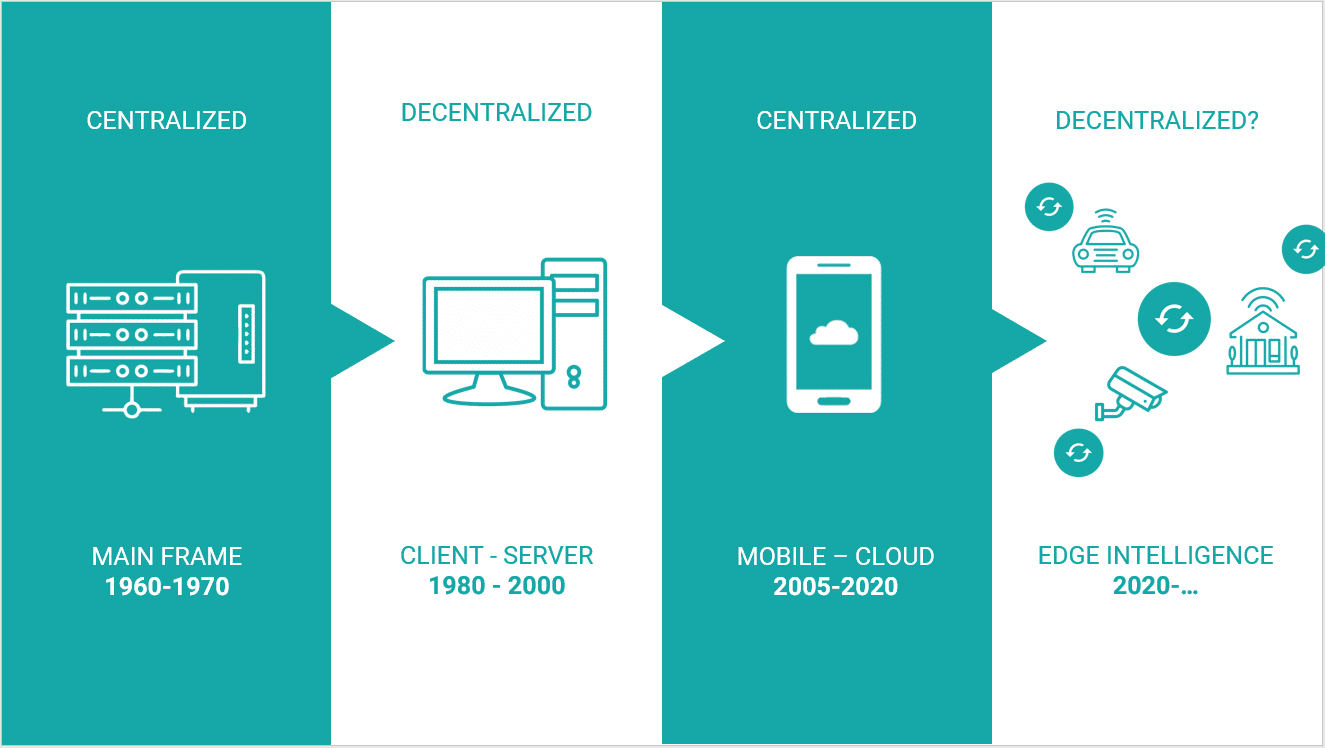

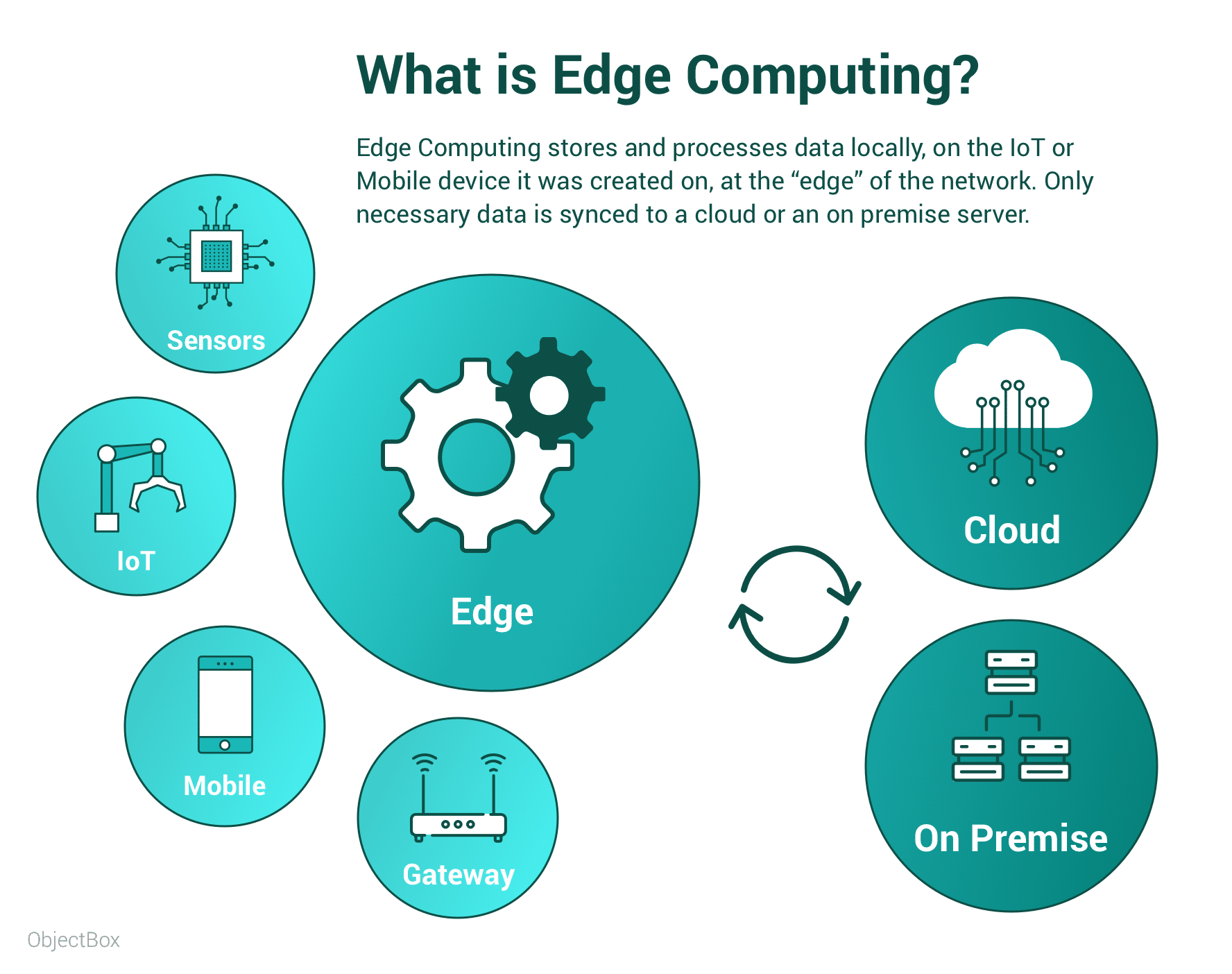

These challenges demonstrate the growing need for an alternative to cloud computing. Cloud computing is an inherently centralized computing paradigm. Edge Computing, by contrast, is a decentralized topology based on keeping data local, at the ‘edge’ of the network, as close to the source as possible. Edge Computing is ideal for applications that are data-intensive, have strict latency requirements, or need to work offline, independent of a cloud connection. Using data on the edge, directly on or near its source, not only increases the efficiency and speed of data use but also reduces unnecessary network load and wasted data traffic.

Coronavirus accelerates the need to digitize

It was clear even before the outbreak that internet infrastructure was struggling to keep up with growing data volumes. However, the pandemic has made broadband limitations more apparent to everyday users.

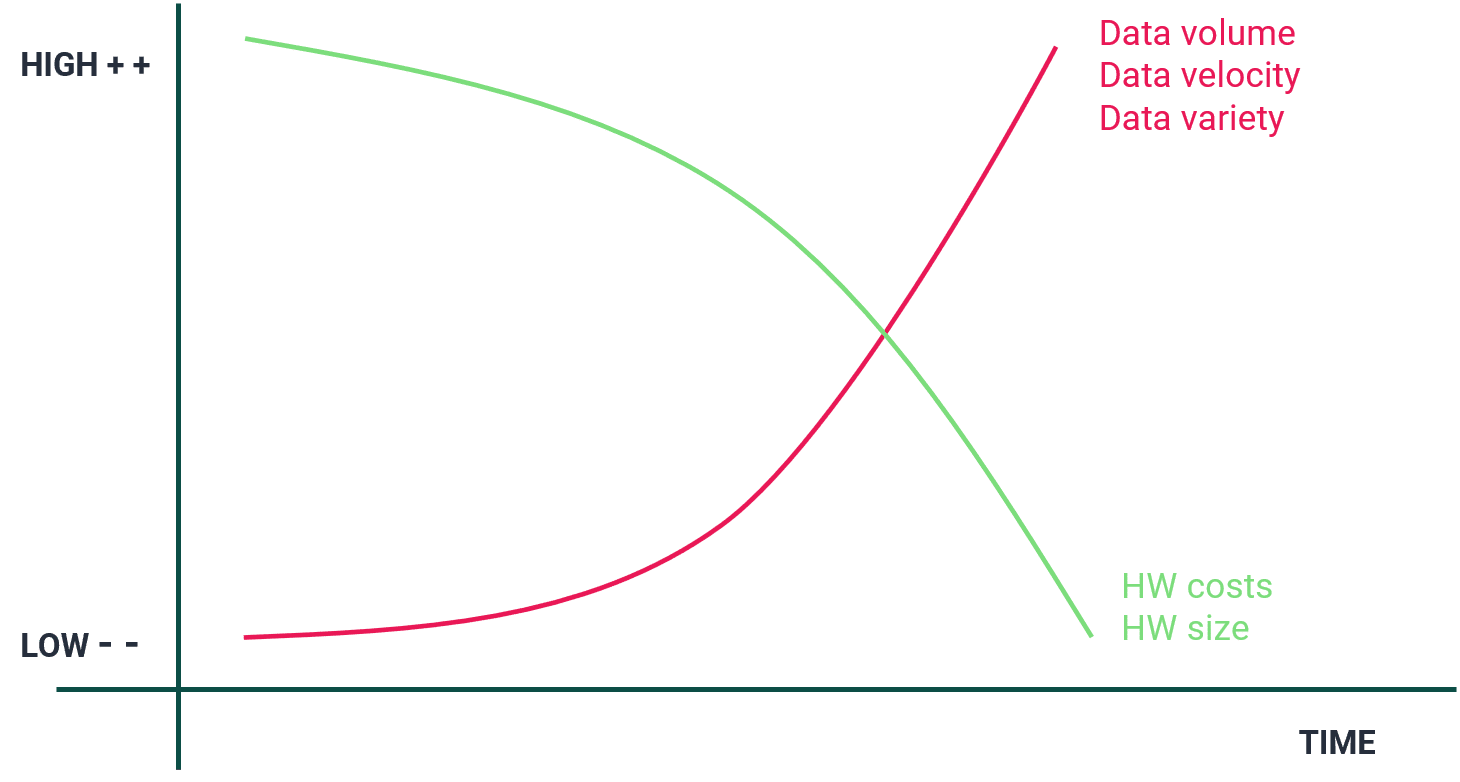

Projections estimate that by 2025 there will be 20 million IoT devices [5] and 1.7 MB of data created per second per person. It is slow, expensive, and wasteful to send all of this data to the cloud for storage and processing. This practice overburdens bandwidth and data center infrastructure, and it makes projects expensive and unsustainable. Working with the data locally, on the edge, where it is produced and used, is more efficient than sending everything to the cloud and back. It brings reduced latency, lower cloud usage and costs, independence from a network connection, more secure data and heightened data privacy, and it even reduces CO2 emissions. Indeed, prior to the pandemic, edge computing was already on the strategic roadmap for over 50% of mobility decision makers. [6]
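To get a feeling for the scale behind the per-person figure, the following is a rough back-of-the-envelope calculation. It is only a sketch: it assumes the 1.7 MB per second rate holds around the clock and uses decimal units (1 GB = 1000 MB); the function name is illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds
MB_PER_GB = 1000                # assumption: decimal units


def daily_volume_gb(mb_per_second: float) -> float:
    """Convert a constant data rate in MB/s into GB produced per day."""
    return mb_per_second * SECONDS_PER_DAY / MB_PER_GB


# Rate quoted in the text above; assumed constant over the whole day.
per_person = daily_volume_gb(1.7)
print(f"{per_person:.1f} GB per person per day")
```

Under these assumptions a single person accounts for well over a hundred gigabytes per day, which illustrates why sending everything to the cloud does not scale.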

As the world begins to recover from the coronavirus pandemic, digitization efforts will no doubt increase. We will see intelligent systems implemented across industries and value chains, accelerating innovation and, alongside it, data volumes and the resulting strain on network bandwidth. Edge computing is a key technology to ensure that this digitalization is both scalable and sustainable.

Edge Computing takes the ‘edge’ off bandwidth strain

What is Edge Computing?

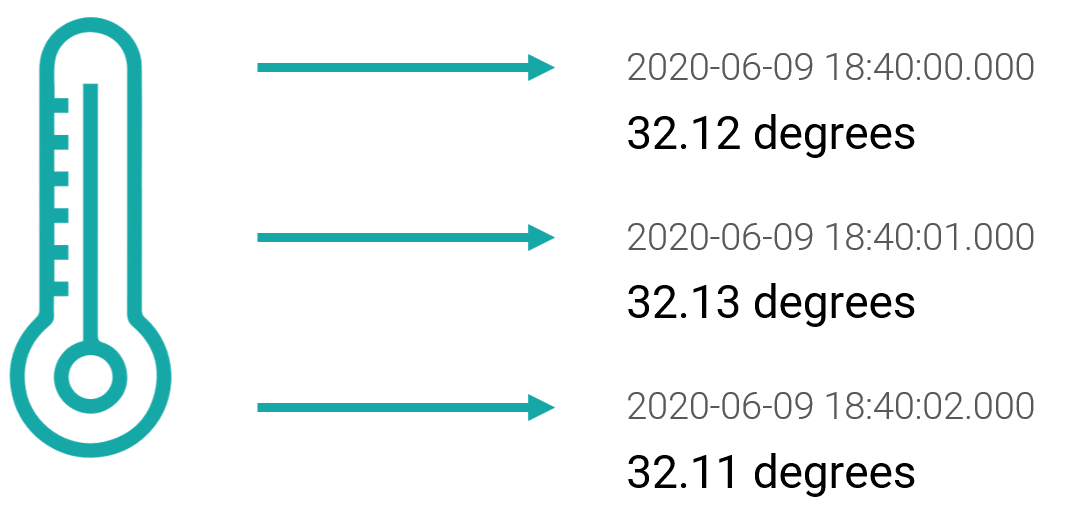

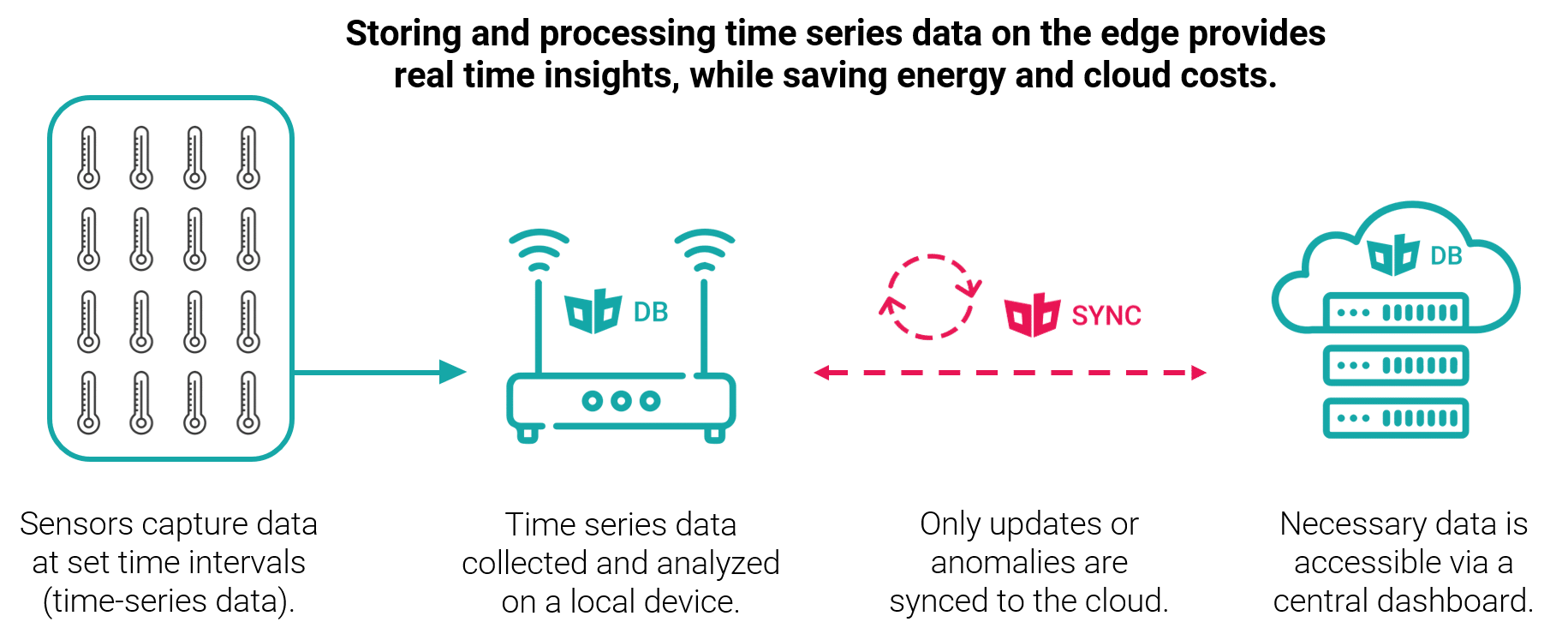

With edge computing, data is stored and used on devices at the “edge” of the network, away from centralized cloud servers. Computing on the edge means that data is stored and used locally, on the device, e.g. a smartphone or IoT device. Edge computing delivers faster decision making, local and offline data processing, and reduced data transfer to the cloud (e.g. only filtered, computed, extrapolated or interpolated data is sent), which saves both bandwidth and cloud storage costs.
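As an illustration of reducing data transfer, the sketch below summarizes raw sensor readings on the device so that only one compact record per window would be uploaded. It is a minimal example under assumptions: the `Summary` type, the window size of 60 and the synthetic temperature values are hypothetical and not taken from any specific product.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, Iterator, List


@dataclass
class Summary:
    """Compact record that would be sent to the cloud instead of raw samples."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_window(readings: Iterable[float]) -> Summary:
    """Reduce one window of raw sensor readings to a single summary record."""
    values = list(readings)
    if not values:
        raise ValueError("window must contain at least one reading")
    return Summary(len(values), min(values), max(values), mean(values))


def batches(readings: List[float], window: int) -> Iterator[List[float]]:
    """Split the raw readings into consecutive fixed-size windows."""
    for start in range(0, len(readings), window):
        yield readings[start:start + window]


# Hypothetical data: 600 temperature samples collected on the device.
raw = [20.0 + (i % 10) * 0.1 for i in range(600)]

# Only these summaries would leave the device; the raw samples stay local.
uploads = [summarize_window(w) for w in batches(raw, window=60)]
print(len(raw), "raw readings ->", len(uploads), "summary records")
```

The raw readings remain available on the device for local decisions, while the cloud receives a fraction of the volume.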

The Edge complements the Cloud

Although some might set cloud and edge in competition, the reality is that edge computing and cloud computing are both useful and relevant technologies. They have different strengths and ideal use cases. Together they can provide the best of both worlds: decentralized local storage and processing, which makes efficient use of hardware on the edge, combined with central storage and processing of some data, which enables additional centralized insights, data backups (redundancy), and remote access. To achieve this, relevant and useful data must be synchronized between the edge and the cloud in a smart and efficient way.
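One way to picture such selective synchronization is a local store that keeps every record on the device and queues only the records matching a sync policy until a connection is available. The sketch below is purely illustrative: `EdgeStore`, its methods and the "alarm only" policy are hypothetical names chosen for this example and do not represent the API of any particular sync product.

```python
from typing import Callable, Dict, List


class EdgeStore:
    """Hypothetical local store that syncs only selected records upstream."""

    def __init__(self, should_sync: Callable[[Dict], bool]) -> None:
        self.records: List[Dict] = []  # everything stays on the device
        self.outbox: List[Dict] = []   # records waiting for a connection
        self.should_sync = should_sync

    def put(self, record: Dict) -> None:
        """Store locally; queue for the cloud only if the policy accepts it."""
        self.records.append(record)
        if self.should_sync(record):
            self.outbox.append(record)

    def flush(self, send: Callable[[List[Dict]], bool]) -> int:
        """Try to upload the outbox; keep it queued if the upload fails."""
        if not self.outbox:
            return 0
        if send(list(self.outbox)):
            sent = len(self.outbox)
            self.outbox.clear()
            return sent
        return 0


# Assumed policy: only alarm events leave the device.
store = EdgeStore(should_sync=lambda record: record["type"] == "alarm")
store.put({"type": "reading", "value": 21.3})
store.put({"type": "alarm", "value": 95.0})
store.put({"type": "reading", "value": 21.4})

cloud: List[Dict] = []  # stands in for the remote side


def offline(batch: List[Dict]) -> bool:
    """Simulates a missing connection: nothing is delivered."""
    return False


def online(batch: List[Dict]) -> bool:
    """Simulates a working connection: the batch reaches the cloud."""
    cloud.extend(batch)
    return True


sent_while_offline = store.flush(offline)  # alarm stays queued locally
sent_while_online = store.flush(online)    # queued alarm is uploaded
print(sent_while_offline, sent_while_online, len(store.records), len(cloud))
```

The device keeps working while offline, and only the data that is actually needed centrally crosses the network once a connection returns.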

Edge computing is an ideal technology to reduce the strain on data centers, so that functions that need a cloud connection have adequate bandwidth, while use cases that benefit from reduced latency and offline functionality are optimized on the edge.

The Edge: interface between the Physical and the Digital World

Edge devices handle the interface between the physical world and the cloud, enabling a whole set of new use cases. “Data-driven experiences are rich, immersive and immediate. But they’re also delay-intolerant data hogs”. [8] They therefore need to happen locally, on the edge. We may see edge computing enabling new forms of remote engagement [9], particularly in a post-corona environment.

Edge devices can be anything from a thermostat or small sensor to a fridge or mobile phone or car – and they are part of our direct physical world and use data from their local environment to enable new use cases. Think self-stocking fridges, self-driving cars, drone-delivered pizzas. In the same way, Edge Computing is the key to the first real world search engine. I am waiting for it every day: “Hey Google, where are my keys?” Within a location like a house, the concepts and technologies to enable such a real-world search engine are all clear and available – it is just a matter of time and ongoing digitization. The basis will need to be a fast and sustainable edge infrastructure.

Sustainability on the Edge

Centralized data centers consume a lot of energy, produce a lot of carbon emissions and cause significant electronic waste. [10] While there is a positive trend towards green data centers, an even more sustainable approach is to cut unnecessary cloud traffic, central computation and storage as much as possible by shifting computation to the edge. Edge Computing strategies that harness the power of hardware that is already deployed (such as smartphones, machines, desktops, gateways) make the solution even more sustainable.

Intelligent Edge: AI and Edge advance hand in hand

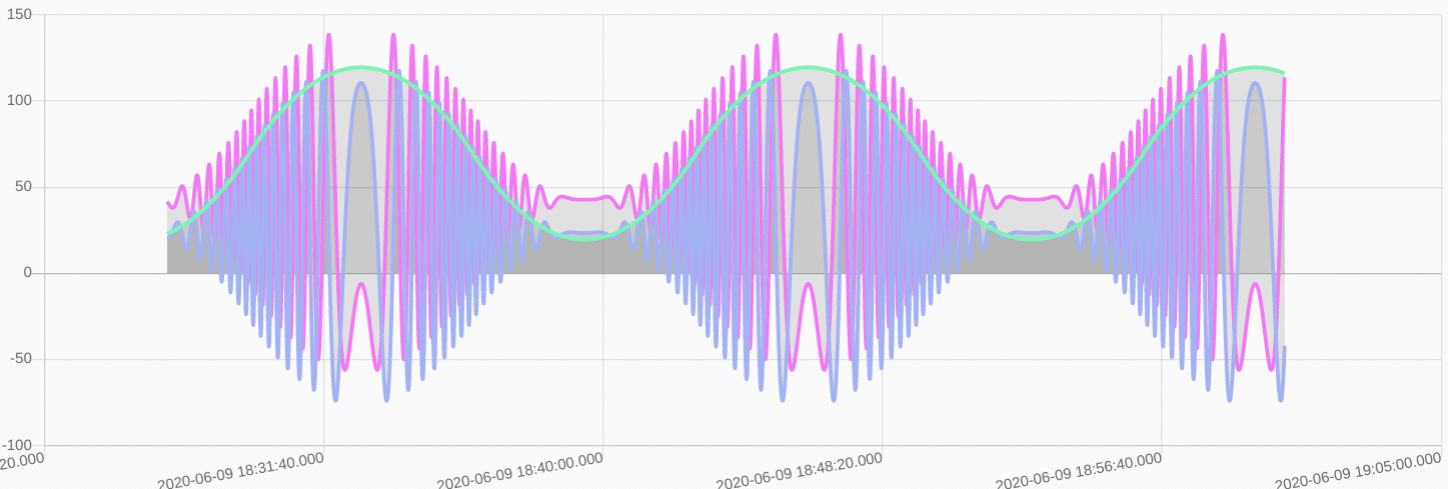

The growth of Artificial Intelligence (AI) and the Edge will go hand in hand. As more and more data is generated at the edge of the network, there will be a greater demand for intelligent data processing and structured optimization to reduce raw data loads going to the cloud. [11] Edge AI will have the power to work with data on local devices, keeping data streams more useful and usable. In the near future, Machine Learning applications will have the ability to learn and create unique, localized, decentralized insights on the edge – based on local inputs.

“With Edge AI, personalization features that we want from the app can be achieved on device. Transferring data over networks and into cloud-based servers allows for latency. At each endpoint, there are security risks involved in the data transfer”. [12] This is part of the reason why the Edge AI software market is forecast to reach a volume of 1.12 trillion dollars by 2023. The development of AI accelerators that improve model inferencing on the edge, notably from NVIDIA, Intel and Google, is helping to make AI on the edge more viable. [13] A fast edge database is a necessary base technology to enable more AI on the edge.

Edge Computing – an answer to Data Privacy concerns and a need for Resilience

As data collection grows in both breadth and depth, there is a stronger need for data privacy and security. Edge computing is one way to tackle this challenge: keeping data where it is produced, locally, makes data ownership clear and data less likely to be attacked and compromised. If a device is compromised, the affected data is clearly delimited, making notification and follow-up actions manageable. ObjectBox, in its core and as an edge technology, is designed to keep data private, on the devices it was created on, and to share only select data as needed.

The more our private and working lives as well as the larger economy depend on digitalization, the more important it is that systems, underlying computing paradigms as well as networks have strong resilience and security. In computer networking, resilience is the ability to “provide and maintain an acceptable level of service in the face of faults and challenges to normal operation.” [14]

Edge Computing shifts computer workloads (the collection, processing, and storage of data) from central locations (like the cloud) to the edge of the network, onto many individual devices such as cell phones. Accordingly, any strain is distributed across many devices. The risk of a total breakdown is therefore reduced: if one device stops working, the rest keep working. Depending on the setup, the individual devices could even compensate for devices that have a problem.

The same applies to security risks: even if data from one device is compromised, all other data sets are still safe; the loss is thus limited and clearly defined. Overall, as a complement to the cloud, edge computing provides improved strength and security in local networks around the world. These local infrastructures can relieve the pressure on the existing complex dependencies, and in turn make the wider system more resilient and flexible. With Edge Computing, crisis response can therefore in all likelihood be faster, better informed, and more effective. [15]

Why Corona-Tracking-Apps need to work on the edge

There was initially considerable debate about taking a centralized versus a decentralized approach to Corona-Tracking-Apps. [16] Many people were worried about their data. Edge Computing, which stores most of the data locally on the user’s device, is a great way to avoid unnecessary data sharing and keep data ownership clear. At the same time, data is by and large much more secure and less likely to be attacked, as the data to be gained from any single device is very limited. An intelligent syncing mechanism like ObjectBox Sync ensures that the data which needs to be shared is shared in a selective, transparent and secure way.

The next few years will see big cultural changes in both our personal and professional lives – a portion of those changes will be driven by increased digitalization. Edge computing is an important paradigm to ensure these changes are sustainable, scalable, and secure. Ultimately, we have the chance to rise from this crisis with new insights, new innovation, and a more sustainable future.

1. https://www.netzoekonom.de/2020/04/11/die-oekonomie-nach-corona-digitalisierung-und-automatisierung-in-hoechstgeschwindigkeit/

2. https://www.cnet.com/news/coronavirus-has-made-peak-internet-usage-into-the-new-normal/

3. https://www.nytimes.com/2020/03/26/business/coronavirus-internet-traffic-speed.html

4. https://www.theverge.com/2020/3/27/21195358/streaming-netflix-disney-hbo-now-youtube-twitch-amazon-prime-video-coronavirus-broadband-network

5. https://www.gartner.com/imagesrv/books/iot/iotEbook_digital.pdf

6. https://www.forbes.com/sites/forrester/2019/12/02/predictions-2020-edge-computing-makes-the-leap/#1aba50104201

7. https://www.gartner.com/smarterwithgartner/what-edge-computing-means-for-infrastructure-and-operations-leaders/

8. https://www.iotworldtoday.com/2020/03/19/ai-at-the-edge-still-mostly-consumer-not-enterprise-market/

9. https://www.accenture.com/us-en/insights/high-tech/edge-processing-remote-viewership

10. https://link.springer.com/article/10.1007/s12053-019-09833-8

11. https://www.forbes.com/sites/cognitiveworld/2020/04/16/edge-ai-is-the-future-intel-and-udacity-are-teaming-up-to-train-developers/#232c8fab68f2

12. https://www.forbes.com/sites/cognitiveworld/2020/04/16/edge-ai-is-the-future-intel-and-udacity-are-teaming-up-to-train-developers/#232c8fab68f2

13. https://www.forbes.com/sites/janakirammsv/2019/07/15/how-ai-accelerators-are-changing-the-face-of-edge-computing/#2c1304ce674f

14. https://en.wikipedia.org/wiki/Resilience_(network)

15. https://www.coindesk.com/how-edge-computing-can-make-us-more-resilient-in-a-crisis

16. https://venturebeat.com/2020/04/13/what-privacy-preserving-coronavirus-tracing-apps-need-to-succeed/