by Vivien | Nov 11, 2024 | Edge AI, Edge Database, Release, vector database

After 6 years and 21 incremental “zero dot” releases, we are excited to announce the first major release of ObjectBox, the high-performance embedded database for C++ and C. As a faster alternative to SQLite, ObjectBox delivers more than just speed – it’s object-oriented, highly efficient, and offers advanced features like data synchronization and vector search. It is the perfect choice for on-device databases, especially in resource-constrained environments or in cases with real-time requirements.

What is ObjectBox?

ObjectBox is a free embedded database designed for object persistence. Here, “object” refers to instances of C++ structs or classes. ObjectBox was built for objects from scratch with zero overhead: no SQL or ORM layer is involved, which results in outstanding object performance.

The ObjectBox C++ database offers advanced features, such as relations and ACID transactions, to ensure data consistency at all times. Store your data privately on-device across a wide range of hardware, from low-profile ARM platforms and mobile devices to high-speed servers. It’s a great fit for edge devices, iOS or Android apps, and server backends. Plus, ObjectBox is multi-platform (any POSIX system will do, e.g. iOS, Android, Linux, or QNX, and Windows is supported as well) and multi-language: e.g., on mobile, you can work with Kotlin, Java or Swift objects. This cross-platform compatibility is no coincidence, as ObjectBox Sync will seamlessly synchronize data across devices and platforms.

Why should C and C++ Developers care?

ObjectBox deeply integrates with C and C++. Persisting C or C++ structs is as simple as a single line of code, with no need to interact with unfamiliar database APIs that disrupt the natural flow of C++. There’s also no data transformation (e.g. SQL, rows & columns) required, and interacting with the database feels seamless and intuitive.

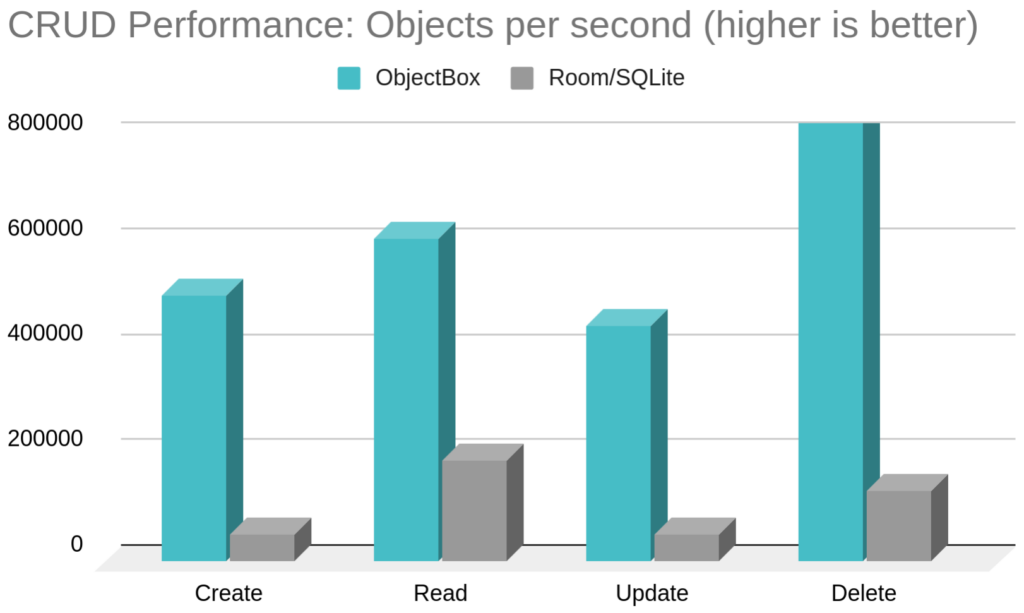

As a C or C++ developer, you likely value performance. ObjectBox delivers exceptional speed (at least we haven’t tested against a faster DB yet). Several hundred thousand CRUD operations per second on commodity hardware are no sweat. Our unique advantage is that, if you want to, you can read raw objects from memory-mapped (“mmapped”) files, i.e. directly from disk. This offers true “zero copy” data access without any throttling layers between you and the data.
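To make the “zero copy” idea concrete, here is a minimal POSIX sketch of reading records straight from a memory-mapped file. It is not ObjectBox's implementation or storage format: the `Reading` struct, `writeReadings`, and `MappedReadings` are hypothetical names made up for illustration, and the code assumes a POSIX system (it will not build as-is on Windows).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fcntl.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

// Hypothetical fixed-size record; not ObjectBox's actual on-disk format.
struct Reading {
    std::uint64_t id;
    double value;
};

// Writes `count` records to `path` with plain POSIX I/O. Returns true on success.
bool writeReadings(const char* path, const Reading* data, std::size_t count) {
    int fd = open(path, O_CREAT | O_TRUNC | O_WRONLY, 0600);
    if (fd < 0) return false;
    const std::size_t bytes = count * sizeof(Reading);
    const ssize_t written = write(fd, data, bytes);
    close(fd);
    return written == static_cast<ssize_t>(bytes);
}

// RAII wrapper around a read-only memory mapping of a file of Reading records.
class MappedReadings {
public:
    explicit MappedReadings(const char* path) {
        int fd = open(path, O_RDONLY);
        if (fd < 0) return;
        struct stat st {};
        if (fstat(fd, &st) == 0 && st.st_size > 0) {
            const std::size_t bytes = static_cast<std::size_t>(st.st_size);
            void* p = mmap(nullptr, bytes, PROT_READ, MAP_PRIVATE, fd, 0);
            if (p != MAP_FAILED) {
                base_ = p;
                bytes_ = bytes;
            }
        }
        close(fd);  // The mapping stays valid after the descriptor is closed
    }
    ~MappedReadings() {
        if (base_) munmap(base_, bytes_);
    }
    MappedReadings(const MappedReadings&) = delete;
    MappedReadings& operator=(const MappedReadings&) = delete;

    // Number of whole records in the mapping (0 if mapping failed).
    std::size_t size() const { return bytes_ / sizeof(Reading); }

    // Returns a reference into the mapped pages: nothing is copied into a user buffer.
    const Reading& at(std::size_t i) const {
        assert(i < size());
        return static_cast<const Reading*>(base_)[i];
    }

private:
    void* base_ = nullptr;
    std::size_t bytes_ = 0;
};
```

After writing a few records with `writeReadings`, constructing a `MappedReadings` on the same path lets you call `at(i)` and read fields directly from the operating system's page cache. The reference is only valid while the `MappedReadings` object is alive.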

Finally, CMake support makes integration straightforward, starting with FetchContent support so you can easily get the library. But there’s more: we offer code generation for entity structs, which takes only a single CMake command.

“ObjectBox++”: A quick Walk-Through

Once ObjectBox is set up for CMake, the first step is to define the data model using FlatBuffers schema files. FlatBuffers is a building block within ObjectBox and is also widely used in the industry. For those familiar with Protocol Buffers, FlatBuffers are its parser-less (i.e., faster) cousin. Here’s an example of a “Task” entity defined in a file named “task.fbs”:

    table Task {
        id: ulong;
        text: string;
    }

And with that file, you can generate code using the following CMake command:

    add_obx_schema(
        TARGET ${PROJECT_NAME}
        SCHEMA_FILES task.fbs
        INSOURCE
    )

Among other things, code generation creates a C++ struct for Task data, which is used to interact with the ObjectBox API. The struct is a straightforward C++ representation of the data model:

    struct Task {
        obx_id id;  // uint64_t
        std::string text;
    };

The code generation also provides some internal “glue code” including the method create_obx_model() that defines the data model internally. With this, you can open the store and insert a task object in just three lines of code:

    obx::Store store(create_obx_model());       // Create the database
    obx::Box<Task> box(store);                  // Main API for a type
    obx_id id = box.put({.text = "Buy milk"});  // Object is persisted

And that’s all it takes to get a database running in C++. This snippet essentially covers the basics of the getting started guide and this example project on GitHub.

Vector Embeddings for C++ AI Applications

Even if you don’t have an immediate use case, ObjectBox is fully equipped for vectors and AI applications. As a “vector database,” ObjectBox is ready for use in high-dimensional vector similarity searches, employing the HNSW algorithm for highly scalable performance beyond millions of vectors.

Vectors can represent semantics within a context (e.g. objects in a picture) or even documents and paragraphs to “capture” their meaning. This is typically used for RAG (Retrieval-Augmented Generation) applications that interact with LLMs. Basically, RAG allows AI to work with specific data, e.g. the documents of a department or company, and thus tailors the generated content to that data.

To quickly illustrate vector search, imagine a database of cities including their location as a 2-dimensional vector. To enable nearest neighbor search, all you need to do is define an HNSW index on the location property, which enables the nearestNeighbors query condition used like this:

    float madrid[] {40.416775F, -3.703790F};
    obx::Query query = cityBox.query(City_::location.nearestNeighbors(madrid, 2)).build();
    auto citiesWithScores = query.findWithScores();  // Nearest cities along with their scores

For more details, refer to the vector search doc pages or the full city vector search example on GitHub.
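To show what a nearest neighbor query computes, here is a small self-contained sketch that does the same search by brute force. It deliberately does not use HNSW or any ObjectBox API: it scores every city and keeps the closest ones, which is fine for a handful of objects but is exactly the linear scan an HNSW index avoids. The `City` struct, `euclideanDistance`, and `nearestNeighbors` function below are hypothetical stand-ins, and the plain Euclidean distance on latitude/longitude is an assumption made for simplicity, not a geographic distance.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a generated City struct.
struct City {
    std::string name;
    std::array<float, 2> location;  // latitude, longitude
};

// Euclidean distance between two 2-dimensional vectors.
double euclideanDistance(const std::array<float, 2>& a, const std::array<float, 2>& b) {
    const double dx = static_cast<double>(a[0]) - static_cast<double>(b[0]);
    const double dy = static_cast<double>(a[1]) - static_cast<double>(b[1]);
    return std::sqrt(dx * dx + dy * dy);
}

// Exhaustive k-nearest-neighbor search: scores every city, then keeps the k best,
// nearest first. Each result is paired with its score (the distance to the target).
std::vector<std::pair<City, double>> nearestNeighbors(const std::vector<City>& cities,
                                                      const std::array<float, 2>& target,
                                                      std::size_t k) {
    std::vector<std::pair<City, double>> scored;
    scored.reserve(cities.size());
    for (const City& city : cities) {
        scored.emplace_back(city, euclideanDistance(city.location, target));
    }
    k = std::min(k, scored.size());
    assert(k <= scored.size());
    std::partial_sort(scored.begin(), scored.begin() + static_cast<std::ptrdiff_t>(k), scored.end(),
                      [](const auto& a, const auto& b) { return a.second < b.second; });
    scored.resize(k);
    return scored;
}
```

Calling `nearestNeighbors(cities, madrid, 2)` returns at most two cities, each paired with its distance to the target. An approximate index such as HNSW trades a little accuracy for not having to visit every vector.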

store.close(); // Some closing words

This release marks an important milestone for ObjectBox, delivering significant improvements in speed, usability, and features. We’re excited to see how these enhancements will help you create even better, feature-rich applications.

There’s so much to explore! Please follow the links to dive deeper into topics like queries, relations, transactions, and, of course, ObjectBox Sync.

As always, we’re here to listen to your feedback and are committed to continually evolving ObjectBox to meet your needs. Don’t hesitate to reach out to us at any time.

P.S. Are you looking for a new job? We have a vacant C++ position to build the future of ObjectBox with us. We are looking forward to receiving your application! 🙂

by Vivien | Nov 11, 2024 | AI, Data Sync, Edge AI, Edge Computing, Edge Database, Mobile Database, Sync

What is Edge AI? Edge AI (also: “on-device AI”, “local AI”) brings artificial intelligence to applications at the network’s edge, such as mobile devices, IoT, and other embedded systems like interactive kiosks. Edge AI combines AI with Edge Computing, a decentralized paradigm designed to bring computing as close as possible to where data is generated and utilized.

What is Cloud AI? As opposed to this, cloud AI refers to an architecture where applications rely on data and AI models hosted on distant cloud infrastructure. The cloud offers extensive storage and processing power.

An Edge for Edge AI: The Cloud

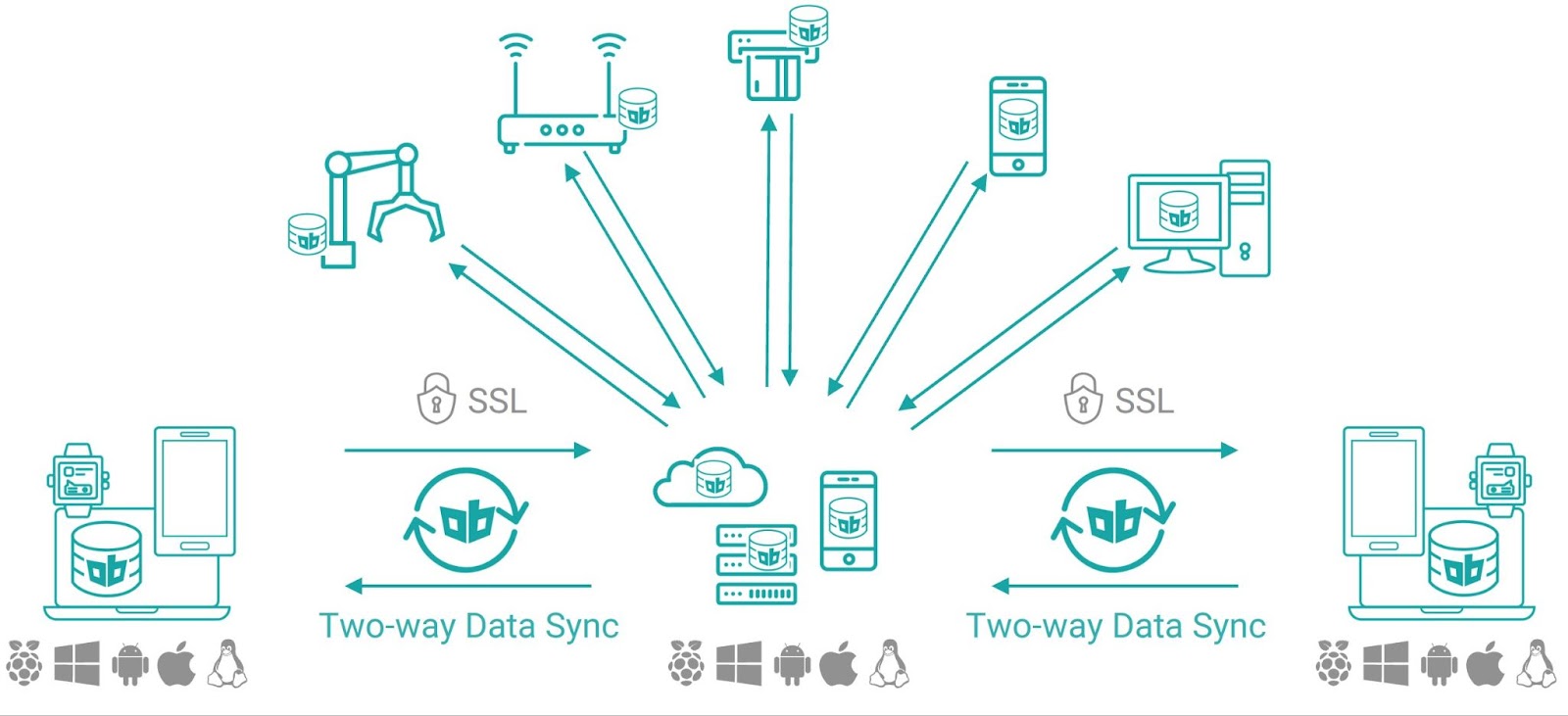

Example: Edge-Cloud AI setup with a secure, two-way Data Sync architecture

Today, there is a broad spectrum of application architectures combining Edge Computing and Cloud Computing, and the same applies to AI. For example, “Apple Intelligence” performs many AI tasks directly on the phone (on-device AI) while sending more complex requests to a private, secure cloud. This approach combines the best of both worlds – with the cloud giving an edge to the local AI rather than the other way around. Let’s have a look at the advantages on-device AI brings to the table.

Benefits of Local AI on the Edge

- Enhanced Privacy. Local data processing reduces the risk of breaches.

- Faster Response Rates. Processing data locally cuts down travel time for data, speeding up responses.

- Increased Availability. On-device processing makes apps fully offline-capable. Operations can continue smoothly during internet or data center disruptions.

- Sustainability/costs. Keeping data where it is produced and used minimizes data transfers, cutting networking costs and reducing energy consumption—and with it, CO2 emissions.

Challenges of Local AI on the Edge

- Data Storage and Processing: Local AI requires an on-device database that runs on a wide variety of edge devices (mobile, IoT, embedded) and performs complex tasks such as vector search locally on the device with minimal resource consumption.

- Data Sync: It’s vital to keep data consistent across devices, necessitating robust bi-directional Data Sync solutions. Implementing such a solution oneself requires specialized tech talent, is non-trivial and time-consuming, and will be an ongoing maintenance factor.

- Small Language Models: Small Language Models (SLMs) like Phi-2 (Microsoft Research), TinyStories (HuggingFace), and Mini-Giants (arXiv) are efficient and resource-friendly but often need enhancement with local vector databases for better response accuracy. An on-device vector database allows on-device semantic search with private, contextual information, reducing latency while enabling faster and more relevant outputs. For complex queries requiring larger models, a database that works both on-device and in the cloud (or a large on-premise server) is perfect for scalability and flexibility in on-device AI applications.

On-device AI is revolutionizing numerous sectors by enabling real-time data processing wherever and whenever it’s needed. It enhances security systems, improves customer experiences in retail, supports predictive maintenance in industrial environments, and facilitates immediate medical diagnostics. On-device AI is essential for personalizing in-car experiences, delivering reliable remote medical care, and powering personal AI assistants on mobile devices—always keeping user privacy intact.

Personalized In-Car Experience: Features like climate control, lighting, and entertainment can be adjusted dynamically in vehicles based on real-time inputs and user habits, improving comfort and satisfaction. Recent studies, such as one by MHP, emphasize the increasing consumer demand for these AI-enabled features. This demand is driven by a desire for smarter, more responsive vehicle technology.

Remote Care: In healthcare, on-device AI enables on-device data processing that’s crucial for swift diagnostics and treatment. This secure, offline-capable technology aligns with health regulations like HIPAA and boosts emergency response speeds and patient care quality.

Personal AI Assistants: Today’s personal AI assistants often depend on the cloud, raising privacy issues. However, some companies, including Apple, are shifting towards on-device processing for basic tasks and secure, anonymized cloud processing for more complex functions, enhancing user privacy.

ObjectBox for On-Device AI – an edge for everyone

The continuum from Edge to Cloud

ObjectBox supports AI applications from Edge to cloud. It stands out as the first on-device vector database, enabling powerful Edge AI on mobile, IoT, and other embedded devices with minimal hardware needs. It works offline and supports efficient, private AI applications with a seamless bi-directional Data Sync solution, completely on-premise, and optional integration with MongoDB for enhanced backend features and cloud AI.

Interested in extending your AI to the edge? Let’s connect to explore how we can transform your applications.

by Vivien | Oct 21, 2024 | Android, Edge Computing, Edge Database, Mobile Database, Sync

In today’s fast-paced, decentralized world, valuable data is generated by everything, everywhere, and all at once. To harness the vast opportunities this data offers data-driven organizations and AI applications, you need to be able to access the data and seamlessly distribute it to where and when it’s needed.

The key to achieving this lies in efficient, offline-first on-device data storage, reliable bi-directional data sync, and a scalable data management backend in the cloud. In other words, you need the infrastructure to manage data flows bi-directionally to tap into fresh data throughout your organization, processes, and applications at the right time.

Together, MongoDB and ObjectBox provide developers with a robust solution to empower seamless workload and data flows on the edge and from the edge to the cloud. ObjectBox seamlessly syncs data bi-directionally across devices even without Internet and syncs back to the cloud and MongoDB when connected. With ObjectBox, devices stay in sync even in environments with intermittent connectivity, high latency, or flaky networks. Capture and unlock the value of all your data, anytime, anywhere, without relying on a constant Internet connection, with MongoDB + ObjectBox.

Seamless Offline-First Data Sync for Edge Devices

Maintaining service continuity is essential, even when devices are offline. Your customers, users, operations, and employees need to be able to rely on essential data at all times. That’s where ObjectBox comes in. It comprises two key components: the ObjectBox Database and ObjectBox Data Sync.

The ObjectBox Database is a lightweight, on-device solution that is highly resource-efficient and fast on restricted hardware like mobile, IoT, and embedded devices, and even in the cloud.

ObjectBox Data Sync enables seamless bi-directional data synchronization between devices. By handling only incremental changes in a compressed binary format, ObjectBox Sync ensures minimal data transfer, automatic conflict resolution, and a seamless user experience even in fluctuating network conditions. This approach effectively simplifies the development process by offering complex sync logic via easy native-language APIs, allowing developers to focus on core app functionality.

Once a connection is available, ObjectBox Data Sync instantly synchronizes changes with MongoDB, providing real-time, bi-directional data sync between edge devices and MongoDB’s robust cloud backend.
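The offline-first, incremental idea described above can be sketched in a few lines: every write is applied locally right away and also recorded as a small change entry, and only the entries the server has not yet confirmed are sent once a connection exists. This is an illustration under simplifying assumptions, not ObjectBox Sync's protocol or API. The `Change` struct and `OfflineFirstStore` class are hypothetical, and compression, conflict resolution, and networking are left out entirely.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// One incremental change; hypothetical and far simpler than a real sync protocol.
struct Change {
    std::uint64_t sequence;  // Local, monotonically increasing change number
    std::uint64_t objectId;
    std::string text;        // New value of the object
};

// Offline-first store sketch: writes always succeed locally and are queued for sync.
class OfflineFirstStore {
public:
    // Applies the write locally and records it in the outbox.
    void put(std::uint64_t objectId, const std::string& text) {
        objects_[objectId] = text;
        outbox_.push_back(Change{++lastSequence_, objectId, text});
    }

    // Changes not yet acknowledged by the server; only these need to be transferred.
    const std::vector<Change>& pendingChanges() const { return outbox_; }

    // Called once the server has confirmed everything up to and including `sequence`.
    void acknowledge(std::uint64_t sequence) {
        assert(sequence <= lastSequence_);
        auto firstUnacked = outbox_.begin();
        while (firstUnacked != outbox_.end() && firstUnacked->sequence <= sequence) {
            ++firstUnacked;
        }
        outbox_.erase(outbox_.begin(), firstUnacked);
    }

    // Number of objects currently stored locally.
    std::size_t objectCount() const { return objects_.size(); }

private:
    std::map<std::uint64_t, std::string> objects_;
    std::vector<Change> outbox_;
    std::uint64_t lastSequence_ = 0;
};
```

While offline, the app keeps calling `put` and the outbox simply grows. After reconnecting, a client would send `pendingChanges()` and call `acknowledge` with the highest confirmed sequence, so already-synced data is never transferred again.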

The Benefits of Offline-First and Real-Time Data Sync with MongoDB and ObjectBox:

- Resource Efficiency & High Speed: ObjectBox consumes minimal computational resources (CPU, power, memory, …) while delivering data persistence speed that is typically on par with in-memory caches for read operations.

- Offline-First Operation: Ensure continuous app performance, even with no internet connection. ObjectBox stores and syncs data bi-directionally on the edge and additionally with MongoDB once connected.

- Real-Time Data Sync: Get reliable, bi-directional data synchronization across devices and MongoDB, enabling real-time updates and data consistency.

- Scalable Edge: Easily handle 100k operations / second on a single device. Host the Sync server on any edge device (like a phone) and easily handle 3M clients with a three-node cluster.

- Scalable Cloud Backend: With MongoDB, businesses can scale their applications to handle growing data and performance demands, seamlessly syncing data between millions of devices and the cloud.

Flexible Setup Scenarios: Tailor Data Sync to Your Needs

ObjectBox and MongoDB offer flexible setup scenarios to meet different application needs. The two main setup options are the central sync and the edge sync setup.

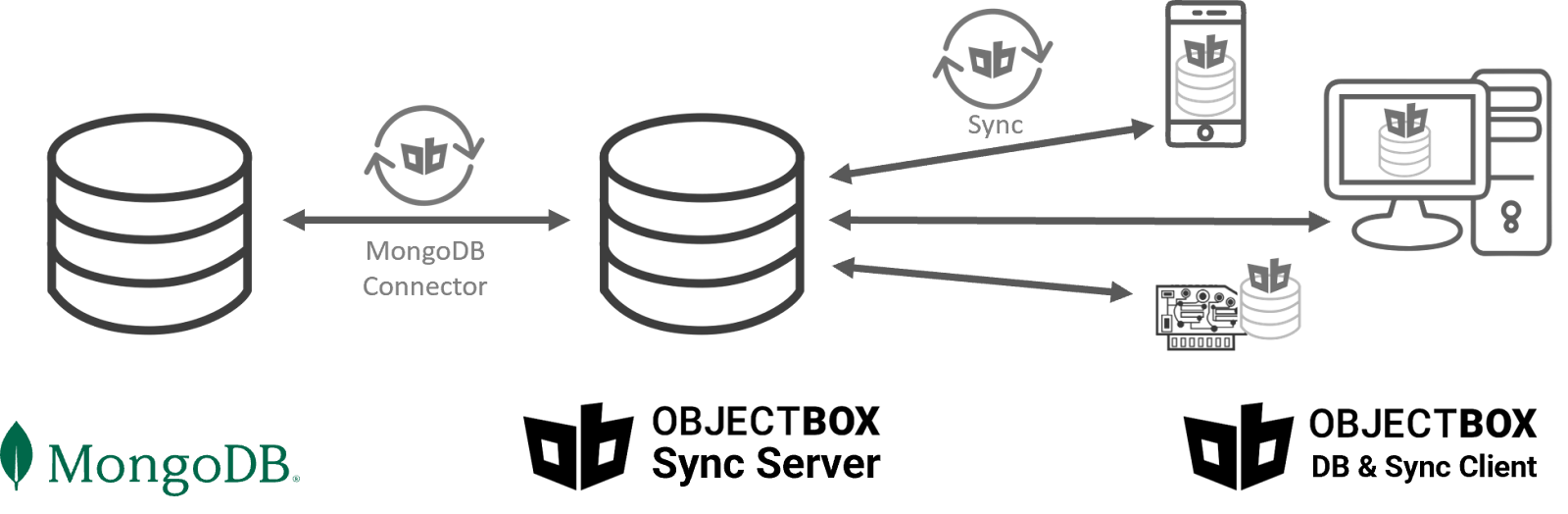

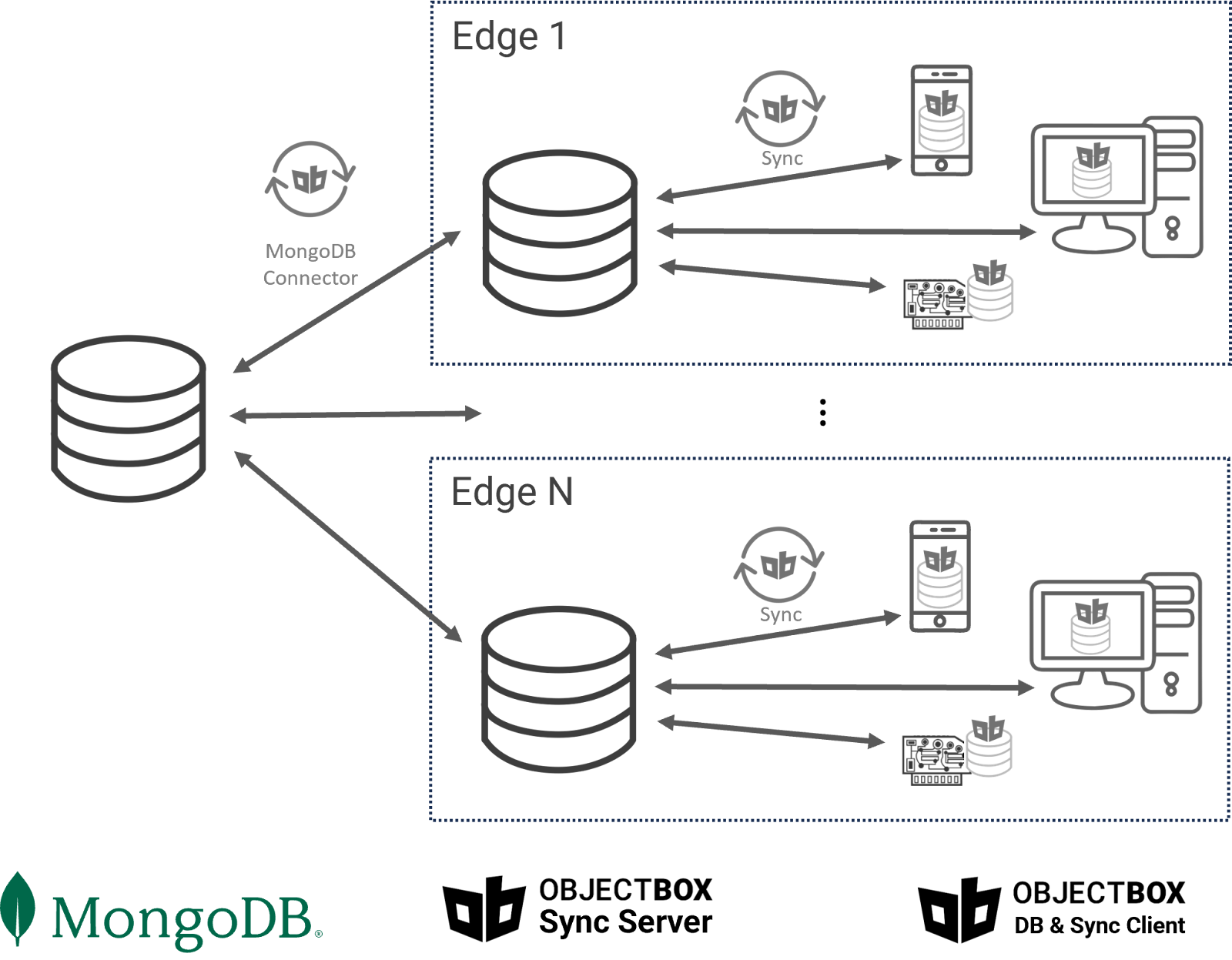

The Central Sync Setup syncs data between edge devices and MongoDB in the cloud, providing centralized data management while retaining offline-first functionality. The ObjectBox Sync Server runs in the cloud or on-premise.

The Edge Sync Setup allows devices to operate and sync data efficiently offline between ObjectBox instances within an edge, e.g. within one location, or within a car. When reconnected, changes are synchronized back to MongoDB making it ideal for environments with intermittent connectivity or distributed devices that need to function independently while syncing back to the cloud when possible.

This structure offers a flexible approach to integrating edge and cloud systems, empowering organizations to choose the setup that best fits their specific use case.

Use Cases for MongoDB + ObjectBox:

- Data-Driven Organizations: In a data-driven organization, every decision relies on access to relevant, up-to-date data. ObjectBox enables real-time data collection and synchronization from edge devices, ensuring access to critical data, even when devices are intermittently connected. This streamlines operations, improves decision-making, and enhances analysis across distributed teams and IoT systems. With MongoDB’s scalable cloud infrastructure, decentralized data integrates seamlessly with the cloud backend for efficient management.

- Point-of-Sale (PoS) & Retail Edge Computing: A seamless customer experience and the ability to keep selling without ever losing a transaction, even during internet outages, are essential for PoS systems in retail. ObjectBox enables offline-first data storage and syncing for PoS systems, allowing transactions to be processed locally, even without internet connectivity. When connectivity returns, ObjectBox syncs transaction data back to MongoDB in real time, ensuring data consistency across multiple locations. Retailers can then leverage MongoDB’s analytics to gain insights into customer behavior and optimize inventory management.

- Software-Defined Vehicle (SDV) & Connected Cars: Modern vehicles generate vast amounts of data from sensors and onboard systems. ObjectBox enables efficient on-device storage and processing, providing real-time access to data for navigation, diagnostics, and infotainment systems. ObjectBox Data Sync ensures that local data is synced back to MongoDB when connectivity is available, supporting centralized analytics, fleet management, and predictive maintenance, optimizing performance and safety while enhancing the user experience.

- Manufacturing & Smart Shopfloor Apps: In smart factories, machines and sensors continuously generate data that must be analyzed and processed in real time. ObjectBox enables local data storage and fast data sync on-premise without the necessity for an Internet connection, ensuring that critical systems that are not connected to the Internet can run smoothly on-site. With a connected instance, ObjectBox takes care of synchronizing this data with the cloud and MongoDB for further analysis and central dashboards.

- AI Applications with On-Device Vector Search: ObjectBox is the first and only on-device vector database, empowering developers to run AI locally on mobile, IoT, embedded, and other commodity devices (Edge AI). In combination with a Small Language Model (SLM), this allows developers to build local AI applications (e.g. RAG, genAI) that run directly on the device, without needing a cloud connection. By syncing with MongoDB, businesses can combine the power of on-device AI with centralized cloud data for even greater insights and performance. This is especially beneficial in scenarios requiring real-time decision-making, such as personalized customer experiences and predictive maintenance.

In today’s data-driven world, a data-first strategy requires seamless integration between edge and cloud data management. The combination of MongoDB and ObjectBox unlocks the full potential of your data. MongoDB’s powerful cloud platform, together with ObjectBox’s efficient on-device database and offline-first capabilities, is ideal for capturing the value of your data from anywhere, including distributed edge devices where valuable data is generated all the time. This partnership empowers businesses to seamlessly handle decentralized data, enabling fast and reliable operations at the edge while syncing back to the cloud for centralized management. Whether on IoT devices, mobile, embedded systems, or commodity hardware, ObjectBox and MongoDB ensure optimal performance everywhere. From remote areas to bad networks, our joint solution keeps data flowing reliably between the edge and the MongoDB backend, even when connectivity or nodes are lost.

by Vivien | Oct 2, 2024 | AI, Edge AI, Edge Computing, Edge Database, Mobile Database, vector database

As artificial intelligence (AI) continues to evolve, companies, researchers, and developers are recognizing that bigger isn’t always better. Therefore, the era of ever-expanding model sizes is giving way to more efficient, compact models, so-called Small Language Models (SLMs). SLMs offer several key advantages that address both the growing complexity of AI and the practical challenges of deploying large-scale models. In this article, we’ll explore why the race for larger models is slowing down and how SLMs are emerging as the sustainable solution for the future of AI.

From Bigger to Better: The End of the Large Model Race

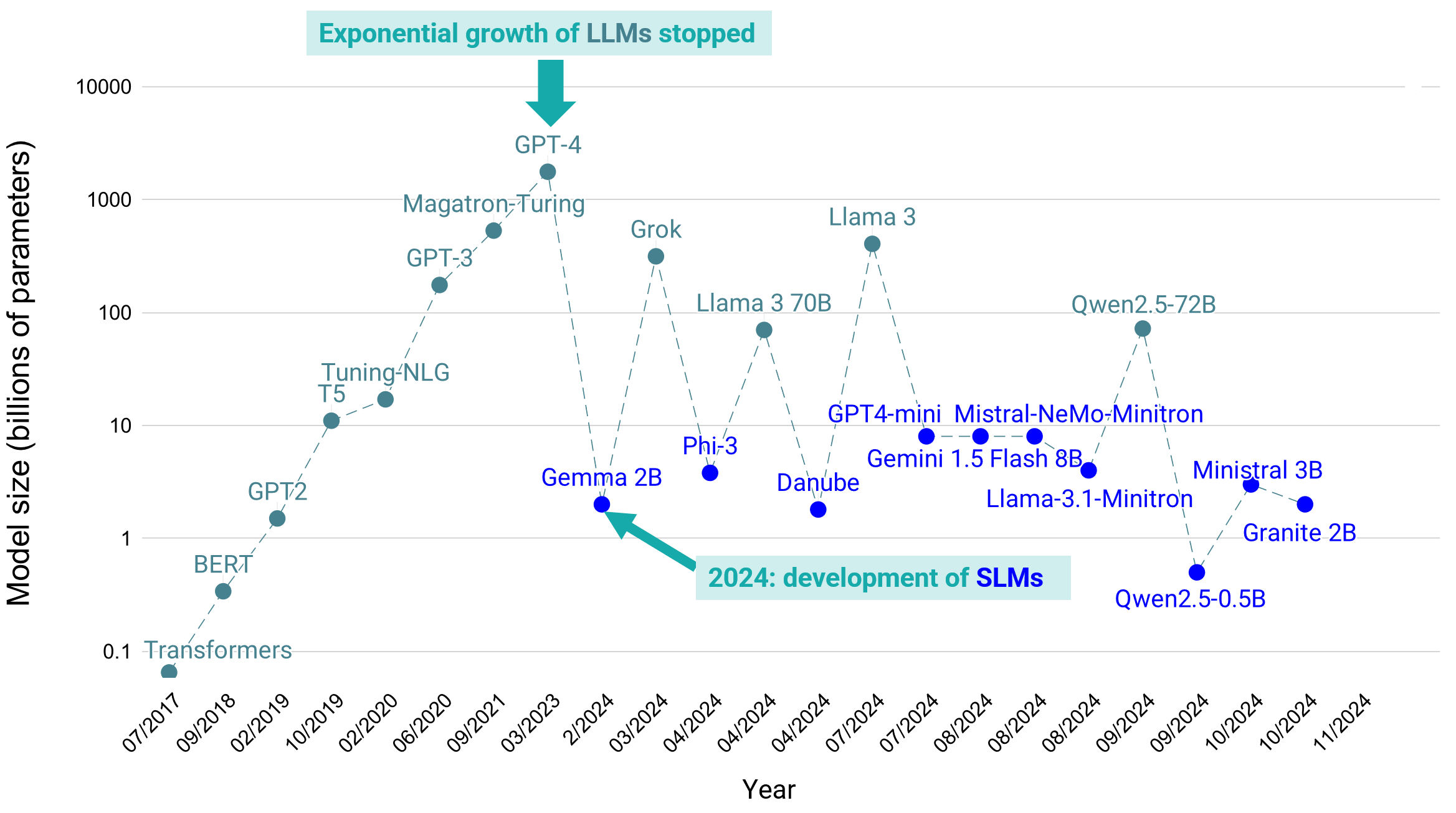

Up until 2023, the focus was on expanding models to unprecedented scales. But the era of creating ever-larger models appears to be coming to an end. Many newer models like Grok or Llama 3 are smaller in size yet maintain or even improve performance compared to models from just a year ago. The drive now is to reduce model size, optimize resources, and maintain power.

The Plateau of Large Language Models (LLMs)

Why Bigger No Longer Equals Better

As models become larger, developers are realizing that the performance improvements aren’t always worth the additional computational cost. Breakthroughs in knowledge distillation and fine-tuning enable smaller models to compete with and even outperform their larger predecessors in specific tasks. For example, medium-sized models like Llama with 70B parameters and Gemma-2 with 27B parameters are among the top 30 models in the chatbot arena, outperforming even much larger models like GPT-3.5 with 175B parameters.
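A quick back-of-the-envelope calculation shows why parameter count matters so much for deployment. The sketch below estimates only the memory needed to hold a model's weights: parameter count times bits per parameter, divided by eight. The function name `weightGigabytes` is hypothetical, the 16-bit and 4-bit precisions are assumptions chosen for illustration, and activations, caches, and runtime overhead are ignored, so real requirements are higher.

```cpp
#include <cassert>

// Approximate size of the weights alone, in decimal gigabytes.
// billionsOfParameters: model size in billions of parameters (e.g. 70 for a 70B model).
// bitsPerParameter: storage precision per weight (assumed, e.g. 16 for fp16, 4 for 4-bit quantization).
// 1e9 parameters * (bits / 8) bytes each = (billions * bits / 8) GB.
double weightGigabytes(double billionsOfParameters, int bitsPerParameter) {
    assert(billionsOfParameters >= 0.0);
    assert(bitsPerParameter > 0);
    return billionsOfParameters * bitsPerParameter / 8.0;
}
```

Under these assumptions, `weightGigabytes(175, 16)` gives 350 GB and `weightGigabytes(70, 16)` gives 140 GB, while a hypothetical 3B-parameter model quantized to 4 bits comes to `weightGigabytes(3, 4)`, i.e. 1.5 GB, which is within reach of a phone or a Raspberry Pi.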

The Shift Towards Small Language Models (SLMs)

In parallel with the optimization of LLMs, the rise of SLMs presents a new trend (see Figure). These models require fewer computational resources, offer faster inference times, and have the potential to run directly on devices. In combination with an on-device database, this enables powerful local GenAI and on-device RAG apps on all kinds of embedded devices, such as mobile phones, Raspberry Pis, commodity laptops, IoT, and robotics.

Advantages of SLMs

Despite the growing complexity of AI systems, SLMs offer several key advantages that make them essential in today’s AI landscape:

Accessibility

As SLMs are less resource-hungry (lower hardware requirements; less CPU, memory, and power), they are more accessible for companies and developers with smaller budgets. Because the model and data can be used locally, on-device or on-premise, there is no need for cloud infrastructure, which also makes them suitable for use cases with high privacy requirements. All in all, SLMs democratize AI development and empower smaller teams and individual developers to deploy advanced models on more affordable hardware.

Cost Reduction and Sustainability

Training and deploying large models requires immense computational and financial resources and comes with high operational costs. SLMs drastically reduce the cost of training, deployment, and operation as well as the carbon footprint, making AI more financially and environmentally sustainable.

On-Device AI for Privacy and Security

SLMs are becoming compact enough for deployment on edge devices like smartphones, IoT sensors, and wearable tech. This reduces the need for sensitive data to be sent to external servers, ensuring that user data stays local. With the rise of on-device vector databases, SLMs can now handle use-case-specific, personal, and private data directly on the device. This allows more advanced AI apps, like those using RAG, to interact with personal documents and perform tasks without sending data to the cloud. With a local, on-device vector database users get personalized, secure AI experiences while keeping their data private.

The Future: Fit-for-Purpose Models: From Tiny to Small to Large Language Models

The future of AI will likely see the rise of models that are neither massive nor minimal but fit-for-purpose. This “right-sizing” reflects a broader shift toward models that balance scale with practicality. SLMs are becoming the go-to choice for environments where specialization is key and resources are limited. Medium-sized models (20-70 billion parameters) are becoming the standard choice for balancing computational efficiency and performance on general AI tasks. At the same time, SLMs are proving their worth in areas that require low latency and high privacy.

Innovations in model compression, parameter-efficient fine-tuning, and new architecture designs are enabling these smaller models to match or even outperform their predecessors. The focus on optimization rather than expansion will continue to be the driving force behind AI development in the coming years.

Conclusion: Scaling Smart is the New Paradigm

As the field of AI moves beyond the era of “bigger is better,” SLMs and medium-sized models are becoming more important than ever. These models represent the future of scalable and efficient AI. They serve as the workhorses of an industry that is looking to balance performance with sustainability and efficiency. The focus on smaller, more optimized models demonstrates that innovation in AI isn’t just about scaling up; it’s about scaling smart.